Introduction to optimization

Optimization is the process of finding the best available solution to a problem, typically by maximizing or minimizing a chosen measure. It is a fundamental tool in mathematics, engineering, economics, and computer science for making decisions and solving complex problems efficiently.

The main goal of optimization is to find the optimal solution, which is the most favorable outcome among a set of possible solutions or choices. This could involve maximizing profits, minimizing costs, improving efficiency, or achieving the highest performance.

The optimization process typically involves defining the objective function, which represents the quantity to be optimized, as well as the constraints that must be satisfied. The objective function can be a mathematical expression or a performance metric that measures the quality of a solution. Constraints, on the other hand, are conditions that must be met in order for a solution to be feasible.
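To make the roles of the objective function and the constraints concrete, here is a minimal sketch on a toy problem. The problem itself (a rectangle with integer sides and a perimeter limit of 20) and the names `objective` and `is_feasible` are assumptions chosen for illustration; they do not come from any particular library.

```python
# Toy problem (an illustrative assumption): choose integer side lengths
# of a rectangle to maximize its area while keeping the perimeter <= 20.

def objective(width, height):
    """Quantity to be optimized: the area of the rectangle."""
    return width * height

def is_feasible(width, height):
    """Constraint: the perimeter must not exceed 20."""
    return 2 * (width + height) <= 20

# Enumerate a small candidate set and keep only the feasible solutions.
candidates = [(w, h) for w in range(1, 10) for h in range(1, 10)]
feasible = [c for c in candidates if is_feasible(*c)]

# The optimal solution is the feasible candidate with the largest objective value.
best = max(feasible, key=lambda c: objective(*c))
best_area = objective(*best)
print(best, best_area)
```

Exhaustive enumeration only works because this search space is tiny. The algorithms discussed in the rest of the article exist because realistic problems are far too large to enumerate.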

Various algorithms and techniques are used to search for the optimal solution, including gradient-based methods, evolutionary algorithms, linear programming, integer programming, and dynamic programming. Many of these methods are iterative: they explore the solution space and refine a candidate solution step by step until a stopping criterion is met, such as no further improvement or a limit on the number of iterations. Depending on the method and the problem, the result may be a provably optimal solution or only a good approximation.

Optimization is applied in a wide range of fields. For example, in engineering, it can be used to design efficient structures or optimize the performance of complex systems. In economics, optimization is used to maximize profits or allocate resources efficiently. In computer science, optimization is used in algorithm design and computation to improve efficiency and speed.

Overall, optimization is a powerful tool for making decisions effectively and efficiently. By finding the best solution among a set of alternatives, optimization helps businesses, researchers, and individuals achieve their goals and objectives.

Definition and concept of optimization in mathematics

In mathematics, optimization refers to the process of finding the best possible solution among all feasible alternatives. It involves the maximization or minimization of a certain quantity, called the objective function, while satisfying a set of constraints.

The concept of optimization is utilized to solve various real-life problems across different fields, such as economics, engineering, computer science, and operations research. These problems can range from determining the most efficient production plan for a company to finding the shortest route for a delivery truck.

Mathematical optimization typically involves formulating the problem as a mathematical model, which consists of an objective function and a set of constraints. The objective function represents the quantity to be optimized, and the constraints define the limitations or conditions that must be satisfied.
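One common way to write such a model is shown below. The symbols are notational assumptions rather than anything fixed by this article: x is the vector of decision variables, f is the objective function, and g_i and h_j are the inequality and equality constraint functions.

```latex
\begin{aligned}
\min_{x \in \mathbb{R}^n} \quad & f(x) \\
\text{subject to} \quad & g_i(x) \le 0, \quad i = 1, \dots, m, \\
                        & h_j(x) = 0, \quad j = 1, \dots, p.
\end{aligned}
```

A maximization problem fits the same template, because maximizing f(x) is equivalent to minimizing -f(x). The set of points x that satisfy all the constraints is the feasible region.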

Optimization techniques employ mathematical algorithms to search for the best solution within the feasible region defined by the constraints. These techniques are often divided into two main categories: deterministic and stochastic. Deterministic methods follow a fixed procedure and produce the same result for the same input; for some problem classes, such as linear programs and convex problems, they can guarantee a global optimum. Stochastic methods incorporate randomness, either in the search itself or to model uncertainty in the problem data, and typically return approximate solutions.

Common methods used for optimization include linear programming, nonlinear programming, dynamic programming, and quadratic programming, among others. These methods use mathematical tools such as calculus, linear algebra, and optimization theory to solve the optimization problems and find the optimal solution.

Overall, optimization in mathematics plays a crucial role in solving complex problems by finding the best solution that meets the given requirements and constraints. It helps in decision-making, resource allocation, and maximizing efficiency in various industries and disciplines.

Types and methods of optimization

Optimization refers to the process of making something as effective, efficient, or functional as possible. It involves finding the best possible solution within a given set of constraints or objectives. There are various types and methods of optimization, including:

1. Continuous Optimization: Continuous optimization deals with finding the best solution within a continuous domain, where the variables can have any real value. It is commonly used in mathematical programming, engineering design, and economics.

2. Discrete Optimization: Discrete optimization involves finding the best solution within a finite set of possible options. It deals with variables that can only take on discrete values and is often used in areas such as combinatorial optimization, network optimization, and scheduling problems.

3. Linear Programming: Linear programming is a method used to optimize linear objective functions subject to linear equality and inequality constraints. It is widely used in operations research, supply chain management, and resource allocation problems.

4. Nonlinear Programming: Nonlinear programming deals with problems in which the objective function, at least one of the constraints, or both are nonlinear. It is used when the relationships between variables cannot be captured by a linear model and is commonly employed in engineering, finance, and data analysis.

5. Integer Programming: Integer programming is a type of optimization that deals with discrete decision variables, where the solutions must be integers. It is widely used in resource allocation, facility location, and production planning problems.

6. Genetic Algorithms: Genetic algorithms are optimization methods inspired by the process of natural selection. They use the principles of mutation, crossover, and selection to find optimal solutions. Genetic algorithms are often used in machine learning, artificial intelligence, and optimization problems with a large search space.

7. Simulated Annealing: Simulated annealing is a stochastic optimization algorithm inspired by the annealing process in metallurgy. It starts from an initial solution and iteratively explores the search space, occasionally accepting moves to worse solutions with a probability that shrinks as a temperature parameter is lowered. This helps it escape local optima, so it is commonly used in problems where the objective function has multiple local optima.

8. Gradient Descent: Gradient descent is an iterative optimization algorithm used to find the minimum of a function. It uses the knowledge of the function’s gradient to iteratively update the solution in the direction of steepest descent. Gradient descent is widely used in machine learning and deep learning for parameter optimization.

9. Heuristics: Heuristics are optimization methods that prioritize efficiency over guaranteed optimality. They use rules of thumb, approximation algorithms, or problem-specific knowledge to find good solutions in a reasonable amount of time. Heuristics are commonly used in complex optimization problems where finding an optimal solution is computationally infeasible.
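The simulated annealing idea from the list above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a tuned implementation: the function name `simulated_annealing`, the geometric cooling schedule, the parameter defaults, and the test function `bumpy` are all hypothetical choices made for this example.

```python
import math
import random

def simulated_annealing(f, x0, steps=5000, temp0=1.0, cooling=0.999,
                        step_size=0.5, seed=0):
    """Minimize a one-dimensional function f, starting from x0."""
    rng = random.Random(seed)          # fixed seed keeps the run reproducible
    x, fx = x0, f(x0)
    best_x, best_fx = x, fx
    temp = temp0
    for _ in range(steps):
        # Propose a random neighbor of the current solution.
        candidate = x + rng.uniform(-step_size, step_size)
        fc = f(candidate)
        delta = fc - fx
        # Always accept improvements; accept a worse move with
        # probability exp(-delta / temp), which shrinks as temp falls.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            x, fx = candidate, fc
            if fx < best_fx:
                best_x, best_fx = x, fx
        temp *= cooling                # geometric cooling (an assumption)
    return best_x, best_fx

def bumpy(x):
    """Test function with several local minima (illustrative only)."""
    return x**2 + 10 * math.sin(x)

start = 5.0
best_x, best_value = simulated_annealing(bumpy, start)
print(best_x, best_value)
```

The function returns the best point visited rather than the final point, since late random moves can leave the search at a slightly worse solution. There is no guarantee that the global minimum is found; the quality of the result depends on the cooling schedule and the number of steps.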

These are just a few examples of the types and methods of optimization. The choice of optimization approach depends on the specific problem at hand, the nature of the constraints and objectives involved, and the available computational resources.
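As an illustration of gradient descent from the list above, the sketch below minimizes the function f(x) = (x - 3)^2, whose gradient is 2(x - 3). The function name `gradient_descent`, the learning rate, and the step count are assumptions chosen for this example.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        # Move in the direction of steepest descent (the negative gradient).
        x = x - learning_rate * grad(x)
    return x

# f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)
```

On this convex function each step shrinks the distance to the minimum by a constant factor, so the iterate approaches 3. On non-convex functions the same procedure may stop at a local minimum, and a learning rate that is too large can cause the iterates to diverge.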

Applications of optimization in various fields

Optimization is an important concept that finds applications in various fields. Here are a few examples of how optimization is utilized in different disciplines:

1. Engineering: Optimization is used in engineering for designing and improving systems, structures, and processes. It helps engineers find the best configuration or set of parameters that maximize performance or minimize costs. For example, optimization is used in designing efficient transportation networks, energy systems, and manufacturing processes.

2. Economics: Optimization plays a crucial role in economics, particularly in the field of mathematical economics. It is used to model and solve problems related to resource allocation, production planning, portfolio optimization, and market equilibrium. Optimization techniques help economists analyze complex systems and make optimal decisions for economic growth and efficiency.

3. Operations Research: Optimization is the foundation of operations research, a discipline that aims to improve decision-making and efficiency in organizations. It is used to solve complex problems in supply chain management, logistics, scheduling, and resource allocation. Operations researchers employ optimization models and algorithms to find optimal solutions that maximize productivity and minimize costs.

4. Finance: Optimization techniques are widely used in financial decision-making. They help investors and portfolio managers optimize their investment strategies and maximize their returns while managing risks. Portfolio optimization, asset allocation, and risk management are areas where optimization plays a vital role in finance.

5. Data Science and Machine Learning: Optimization algorithms are essential in data science and machine learning. Training a model usually means adjusting its parameters to minimize a loss function that measures prediction error. Techniques like linear programming, genetic algorithms, and gradient descent are employed to find optimal solutions to problems such as regression, classification, and clustering.

6. Healthcare: Optimization has applications in healthcare, such as optimizing resource allocation in hospitals, designing efficient healthcare delivery systems, and scheduling appointments and surgeries to minimize waiting times. It can also be used in personalized medicine to optimize treatment plans based on individual patient characteristics.

7. Transportation and Logistics: Optimization is central to transportation and logistics, where it is applied to routing, scheduling, and inventory management. It helps companies maximize efficiency, reduce costs, and improve delivery times. Vehicle routing and warehouse operations are areas where optimization techniques are widely used.

These are just a few examples illustrating the wide-ranging applications of optimization in various fields. Optimization plays a crucial role in enhancing efficiency, productivity, and decision-making, making it a valuable tool across numerous disciplines.

Challenges and future directions in optimization research

Optimization research faces a number of challenges, which in turn suggest directions for future work. Some key challenges include:

1. Complex Problem Domains: Optimization problems can become increasingly complex, involving a large number of variables and constraints. Real-world scenarios often have multiple conflicting objectives which need to be simultaneously optimized. Dealing with such complexity requires advanced optimization techniques.

2. Scalability: As problem sizes grow, optimization algorithms need to scale efficiently to handle large amounts of data and computational requirements. Developing algorithms that can effectively handle large-scale optimization problems is a challenge.

3. Uncertainty and Dynamic Environments: Many optimization problems exist in uncertain and dynamically changing environments. Factors such as unpredictable inputs or changing constraints make traditional optimization techniques less effective. Research is needed to develop algorithms that can adapt and optimize solutions in such environments.

4. Computational Efficiency: Optimization algorithms often need to find optimal or near-optimal solutions within reasonable time frames. Enhancing the computational efficiency of optimization algorithms is an ongoing challenge, especially for highly complex problems.

5. Continuous versus Discrete Optimization: Optimization problems can be categorized as either continuous or discrete, depending on the nature of variables. Developing algorithms that efficiently handle both continuous and discrete optimization problems is important for addressing a wide range of real-world challenges.

Potential future directions in optimization research include:

1. Multi-objective Optimization: Many real-world problems involve optimizing multiple objectives simultaneously. Future research can focus on developing algorithms that efficiently handle multi-objective optimization problems, providing a trade-off between conflicting objectives.

2. Metaheuristic Algorithms: Metaheuristic algorithms, such as genetic algorithms and simulated annealing, have proven effective in optimization. Further research can explore the adaptation and combination of different metaheuristic techniques to enhance their performance and applicability.

3. Hybrid Optimization Algorithms: Hybrid algorithms, combining multiple optimization techniques, can leverage the strengths of different algorithms to solve complex optimization problems. Future research can focus on developing hybrid approaches that provide improved performance and robustness.

4. Robust Optimization: Considering uncertainty and variability in optimization problems is essential for real-world applicability. Future research can focus on developing robust optimization techniques that can handle uncertain or changing environments effectively.

5. Optimization in Machine Learning: Optimization plays a crucial role in machine learning algorithms. Future research can explore optimization techniques specifically tailored for training deep neural networks and other machine learning models to improve their performance and efficiency.
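The trade-off between conflicting objectives mentioned under multi-objective optimization is often described in terms of Pareto dominance: a solution is kept if no other solution is at least as good in every objective and strictly better in at least one. The sketch below filters a small set of candidates this way. The `(cost, delivery_time)` pairs are made-up data, and the function names are assumptions for illustration.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly
    better in at least one (all objectives are minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))

def pareto_front(points):
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical (cost, delivery_time) pairs; lower is better for both.
designs = [(1, 9), (2, 7), (3, 8), (4, 4), (6, 5), (7, 2), (9, 3)]
front = pareto_front(designs)
print(front)
```

The resulting front contains the candidates among which a decision-maker must still choose, since improving one objective within that set always worsens the other. This pairwise comparison takes quadratic time in the number of points, which is acceptable for a sketch but not for large candidate sets.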

Overall, the challenges and future directions in optimization research revolve around addressing complexity, scalability, uncertainty, computational efficiency, and exploring new areas of application. By overcoming these challenges and exploring new avenues, optimization research can continue to advance and contribute to solving real-world problems.

Topics related to Optimization

Introduction to Optimization Techniques – YouTube

Introduction to Optimization Techniques – YouTube

Formulation |Part 1| Linear Programming Problem – YouTube

Formulation |Part 1| Linear Programming Problem – YouTube

Optimization Problems – Calculus – YouTube

Optimization Problems – Calculus – YouTube

Mod-01 Lec-01 Optimization – Introduction – YouTube

Mod-01 Lec-01 Optimization – Introduction – YouTube

Mod-01 Lec-02 Formulation of LPP – YouTube

Mod-01 Lec-02 Formulation of LPP – YouTube

Lecture 01: Introduction and History of Optimization – YouTube

Lecture 01: Introduction and History of Optimization – YouTube

Lecture 02: Basics of Linear Algebra – YouTube

Lecture 02: Basics of Linear Algebra – YouTube

Lecture 1 | Convex Optimization I (Stanford) – YouTube

Lecture 1 | Convex Optimization I (Stanford) – YouTube

Lecture 2 | Convex Optimization I (Stanford) – YouTube

Lecture 2 | Convex Optimization I (Stanford) – YouTube

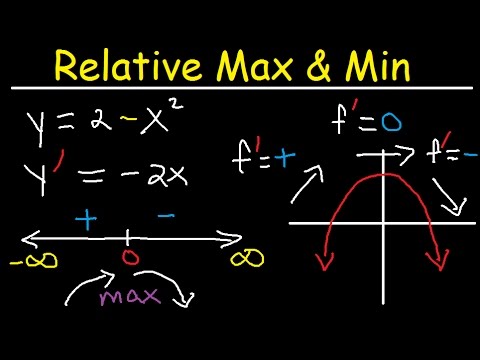

Relative Extrema, Local Maximum and Minimum, First Derivative Test, Critical Points- Calculus – YouTube

Relative Extrema, Local Maximum and Minimum, First Derivative Test, Critical Points- Calculus – YouTube
