Definition of Control Theory in mathematics

Control theory is a branch of mathematics that deals with the analysis and design of dynamical systems, that is, systems whose behavior evolves over time according to certain rules or laws. It studies how such a system behaves and develops methods for influencing that behavior through the inputs applied to it, so that the system achieves desired outcomes.

In control theory, a system is a set of components that interact with each other according to certain rules or laws. Examples include mechanical systems, electrical circuits, chemical processes, biological systems, and many other real-world phenomena.

The main goal of control theory is to design controllers that can influence or regulate the behavior of the system in order to achieve specific objectives. These objectives could include stability, tracking desired outputs, minimizing errors, optimizing performance, or achieving desired setpoints.

Control theory utilizes various mathematical concepts and tools, such as differential equations, linear algebra, calculus, optimization techniques, and feedback principles. It involves modeling the system and analyzing its dynamics, stability, controllability, and observability. Controllers are designed based on these analyses, and their performance is evaluated through simulation or practical implementation.
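
As a small illustration of the controllability and observability analysis mentioned above, the sketch below applies the standard Kalman rank test to a linear state-space model dx/dt = Ax + Bu, y = Cx. The system is controllable when the matrix [B, AB, ..., A^(n-1)B] has full rank n, and observable when the matrix stacked from C, CA, ..., CA^(n-1) has full rank n. The double-integrator matrices are a hypothetical example chosen for illustration, and the sketch assumes NumPy is available.

```python
import numpy as np


def controllability_matrix(A, B):
    """Stack [B, AB, A^2 B, ..., A^(n-1) B] side by side."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return np.hstack(blocks)


def observability_matrix(A, C):
    """Stack [C; CA; CA^2; ...; CA^(n-1)] on top of each other."""
    n = A.shape[0]
    blocks = [C]
    for _ in range(n - 1):
        blocks.append(blocks[-1] @ A)
    return np.vstack(blocks)


def is_controllable(A, B):
    return bool(np.linalg.matrix_rank(controllability_matrix(A, B)) == A.shape[0])


def is_observable(A, C):
    return bool(np.linalg.matrix_rank(observability_matrix(A, C)) == A.shape[0])


# Hypothetical example: a frictionless mass (double integrator) pushed by a force.
# State x = [position, velocity], input u = force.
A = np.array([[0.0, 1.0],
              [0.0, 0.0]])
B = np.array([[0.0],
              [1.0]])
C_pos = np.array([[1.0, 0.0]])  # only the position is measured
C_vel = np.array([[0.0, 1.0]])  # only the velocity is measured

print(is_controllable(A, B))    # True
print(is_observable(A, C_pos))  # True
print(is_observable(A, C_vel))  # False
```

Measuring only the velocity loses observability here, because no record of the velocity alone can reveal the mass’s starting position.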

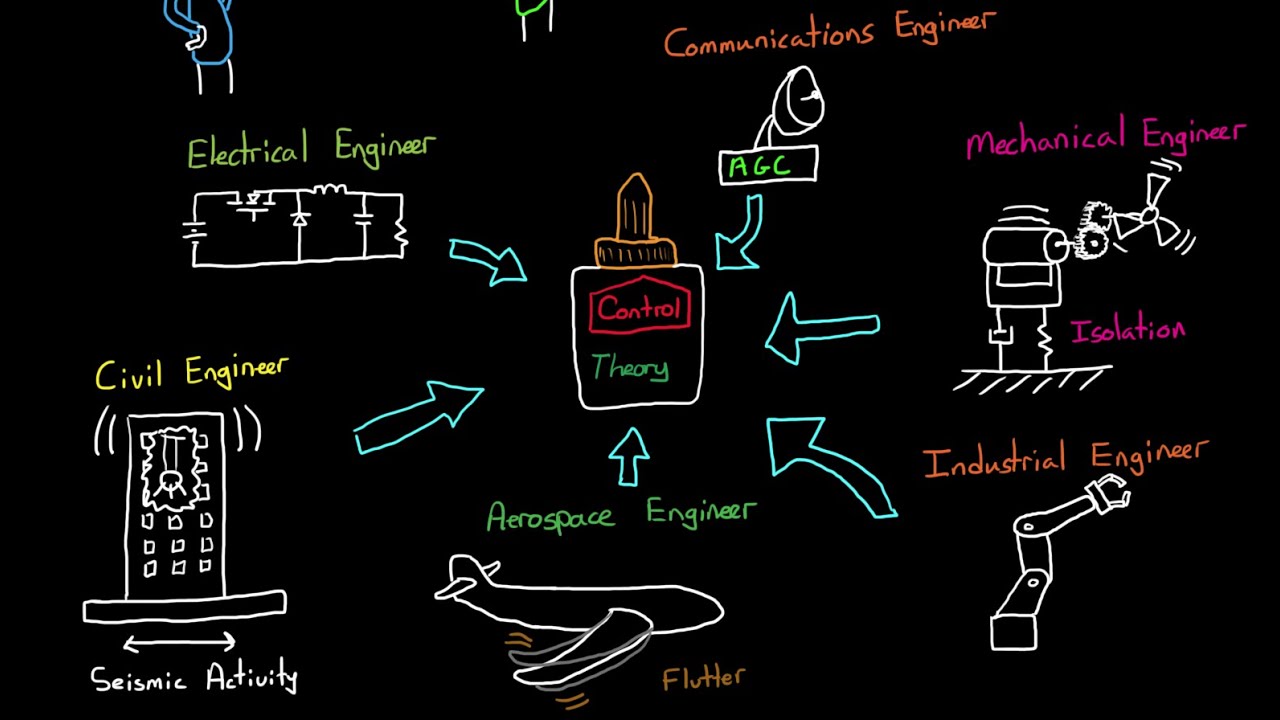

Overall, control theory provides a framework for understanding and manipulating the behavior of dynamic systems, allowing engineers and scientists to design and improve systems in a wide range of fields, including robotics, aerospace, manufacturing, power systems, transportation, and many others.

Principles and concepts of Control Theory

Control theory is a branch of engineering and mathematics that deals with the control of dynamic systems. The main purpose of control theory is to design and analyze systems that can maintain a desired behavior or performance in the presence of disturbances and uncertainties.

There are several key principles and concepts in control theory:

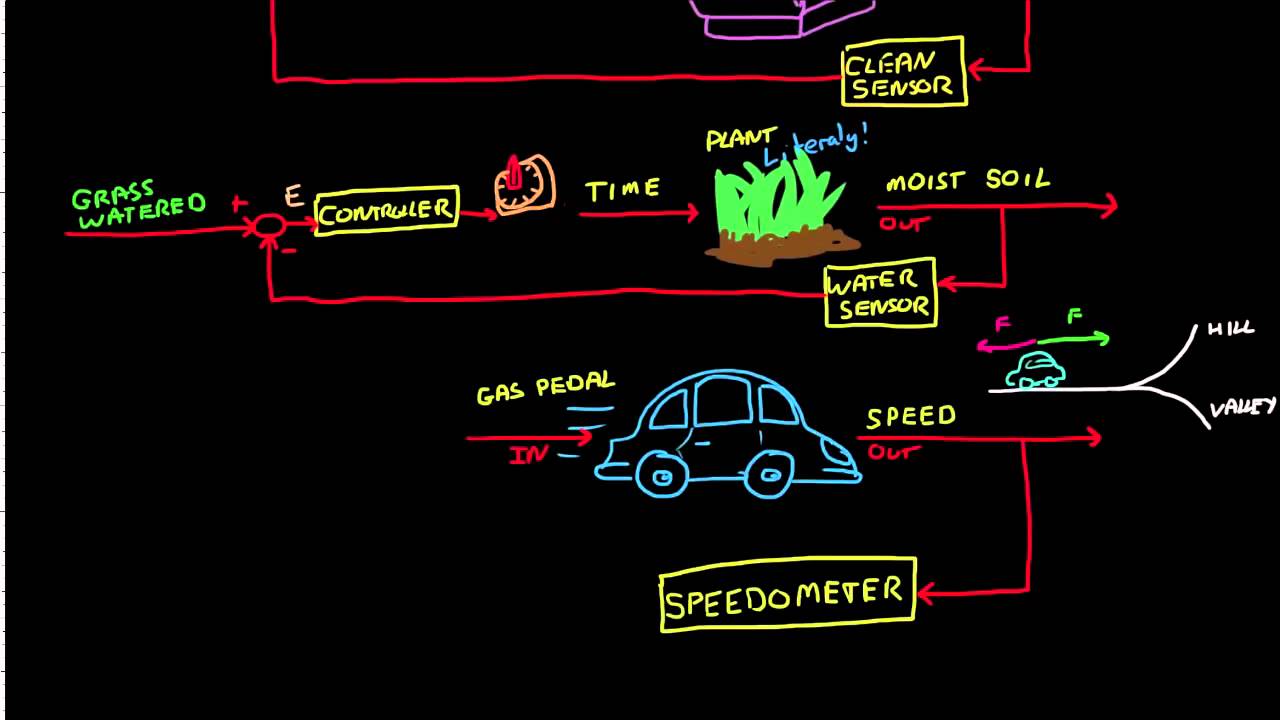

1. Feedback: Feedback is a fundamental concept in control theory. It involves measuring the output of a system and using that information to adjust the system’s inputs or parameters. Feedback allows for the correction of errors or deviations from the desired behavior.

2. Control system: A control system is a collection of components and processes that work together to achieve a desired output or response. It typically consists of sensors, controllers, actuators, and a plant or process being controlled. The control system receives feedback from the plant, processes it, and generates control signals to adjust the plant’s inputs.

3. Open-loop and closed-loop control: In an open-loop control system, the control signals are determined solely based on the desired output or reference input. There is no feedback involved, which means that disturbances or uncertainties cannot be effectively mitigated. In contrast, closed-loop control systems use feedback to adjust the control signals and maintain the desired output despite disturbances or uncertainties.

4. Control objectives: Control theory aims to achieve specific control objectives, such as stability, tracking, disturbance rejection, and optimality. Stability means that the system stays near, or returns to, a desired equilibrium after a perturbation instead of diverging. Tracking involves making the system output follow a reference input. Disturbance rejection focuses on minimizing the impact of disturbances on the system’s output. Optimality aims to find control strategies that minimize a cost function related to the system’s performance.

5. System modeling: Control theory relies on mathematical models to describe the behavior of the system being controlled. These models can be derived from physical principles or identified from measured data. They are typically expressed as differential equations (often in state-space form) or as transfer functions, which capture the relationship between the input and output variables of the system.

6. Controller design: Control theory involves designing controllers that generate appropriate control signals based on the system’s feedback. Various control techniques and algorithms are used for controller design, including proportional-integral-derivative (PID) control, state-space control, and optimal control methods.

7. Stability analysis: Stability analysis is an important aspect of control theory. It examines the stability properties of the system to ensure that its response does not diverge or exhibit sustained or growing oscillations. Common techniques include Lyapunov stability theory, the Routh–Hurwitz criterion, and the Nyquist stability criterion, whose gain and phase margins are often read from Bode plots.
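
The feedback, open-loop versus closed-loop, and disturbance-rejection ideas in this list can be seen in a short simulation. The sketch below assumes a hypothetical first-order plant dy/dt = -y + u + d, a constant reference r = 1, and an unmeasured step disturbance d = 0.5 that appears at t = 5 s. It compares a fixed open-loop input with a PI controller (a PID controller with the derivative gain set to zero); the gains kp = 2 and ki = 1 are illustrative choices, not tuned values.

```python
class PIController:
    """Proportional-integral controller: u = kp*e + ki*integral(e), with e = r - y."""

    def __init__(self, kp, ki):
        self.kp = kp
        self.ki = ki
        self.integral = 0.0

    def __call__(self, r, y, dt):
        e = r - y                # feedback: compare the measurement with the reference
        self.integral += e * dt  # integral action accumulates any persistent error
        return self.kp * e + self.ki * self.integral


def open_loop(r, y, dt):
    # Ignores the measurement y. For this plant, u = r gives y -> r only when d = 0.
    return r


def simulate(controller, t_end=30.0, dt=0.01, r=1.0):
    """Euler-integrate the hypothetical plant dy/dt = -y + u + d; return the final error r - y."""
    y = 0.0
    for k in range(int(round(t_end / dt))):
        d = 0.5 if k * dt >= 5.0 else 0.0  # unmeasured step disturbance from t = 5 s
        u = controller(r, y, dt)
        y += dt * (-y + u + d)
    return r - y


print(f"open-loop final error:   {simulate(open_loop):.3f}")                       # about -0.5
print(f"closed-loop final error: {simulate(PIController(kp=2.0, ki=1.0)):.3f}")   # close to 0
```

The open-loop input cannot react to the disturbance, so its output settles near 1.5 instead of 1. A proportional-only controller would shrink that offset but not remove it; the integral term is what drives the steady-state error to zero for a constant disturbance.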

Control theory has applications in various fields, including aerospace engineering, robotics, process control, and economic systems. It provides a theoretical framework for designing and analyzing control systems to achieve desired performance and behavior.

Applications of Control Theory in mathematics

Control theory is a branch of mathematics that deals with modeling, analysis, and design of systems that can be controlled or influenced to behave in a desired manner. While control theory is widely applied in engineering and physics, it also has applications within mathematics itself. Here are some areas where control theory is used in mathematics:

1. Optimal control: Control theory is utilized to solve optimization problems, where the goal is to find the best control strategy that minimizes a cost function while satisfying certain constraints. Optimal control theory has applications in areas such as calculus of variations, partial differential equations, and optimal transport theory.

2. Stabilization of dynamical systems: Control theory provides techniques for stabilizing dynamical systems, which are systems that evolve over time according to a set of equations. In mathematics, these stabilization techniques are applied to various systems such as differential equations, difference equations, functional equations, and partial differential equations.

3. Stability analysis: Control theory is used to analyze the stability of mathematical models and systems. By studying the stability properties of a system, one can determine its long-term behavior and predict its response to disturbances. Stability analysis in mathematics is crucial for understanding the behavior of differential equations, difference equations, and other mathematical models.

4. Feedback control in mathematical models: Feedback control is a fundamental concept in control theory, where the output of a system is measured and used to adjust the input in order to achieve a desired behavior. In mathematics, feedback control techniques can be applied to differential equations, general dynamical systems, and networked systems whose interactions are described by graphs, in order to improve their performance or stability.

5. Approximation theory: Control theory provides tools for approximating complex systems with simpler models, which can be easier to analyze or control. In mathematics, approximation theory is used to construct simpler models that capture the essential behavior of more complex systems. Control theory techniques, such as model reduction and system identification, are employed to obtain accurate approximations.
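
Optimal control, stabilization, and stability analysis from the list above meet in the linear-quadratic regulator (LQR). The sketch below is one hedged illustration, assuming a hypothetical double integrator sampled every dt = 0.1 s and illustrative weights Q = I and R = 1. It computes the feedback gain that minimizes a quadratic cost by iterating the discrete-time Riccati recursion, then checks that the closed-loop matrix A - BK has all eigenvalues inside the unit circle. In practice a library solver such as SciPy’s `scipy.linalg.solve_discrete_are` would usually replace the hand-written loop.

```python
import numpy as np


def dlqr(A, B, Q, R, max_iter=10_000, tol=1e-10):
    """Infinite-horizon discrete-time LQR.

    Minimizes sum_k (x_k' Q x_k + u_k' R u_k) subject to x_{k+1} = A x_k + B u_k,
    using the feedback law u_k = -K x_k. P is found by iterating the Riccati
    recursion until it stops changing.
    """
    P = Q.copy()
    for _ in range(max_iter):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P_next = Q + A.T @ P @ (A - B @ K)
        converged = np.max(np.abs(P_next - P)) < tol
        P = P_next
        if converged:
            break
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
    return K, P


def spectral_radius(M):
    return float(np.max(np.abs(np.linalg.eigvals(M))))


# Hypothetical plant: a double integrator sampled every dt seconds.
dt = 0.1
A = np.array([[1.0, dt],
              [0.0, 1.0]])
B = np.array([[0.5 * dt**2],
              [dt]])
Q = np.eye(2)          # penalize position and velocity deviations equally
R = np.array([[1.0]])  # penalize control effort

K, P = dlqr(A, B, Q, R)
print("open-loop spectral radius:  ", spectral_radius(A))          # about 1: not asymptotically stable
print("closed-loop spectral radius:", spectral_radius(A - B @ K))  # below 1: stabilized
```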

These applications of control theory in mathematics highlight its significance in analyzing and influencing the behavior of mathematical models and systems. By applying control theory techniques, mathematicians can gain insights into the stability, optimization, and control of various mathematical systems.

Control Theory in relation to other branches of mathematics

Control theory is a branch of mathematics that deals with the analysis and design of systems with inputs and outputs, aiming to control or regulate the behavior of these systems. It is closely related to other branches of mathematics, including:

1. Differential Equations: Control theory heavily relies on the theory of ordinary and partial differential equations. These equations are used to model the dynamics of the system being controlled, allowing control engineers to understand and predict its behavior.

2. Linear Algebra: Many control system models involve linear dynamics, and linear algebra is used to analyze and solve these systems. This includes methods such as matrix operations, eigenvalues, eigenvectors, and solving linear equations.

3. Optimization Theory: Control theory often involves optimization problems, where the goal is to find control strategies that optimize certain performance criteria. Optimization theory provides tools and techniques to solve these problems and find optimal control solutions.

4. Probability Theory and Stochastic Processes: In some control systems, the dynamics or disturbances are subject to randomness. Probability theory and stochastic processes are used to model and analyze such systems, enabling control engineers to make decisions based on probabilistic information.

5. Numerical Methods: Control theory often requires the use of numerical methods and algorithms to analyze, simulate, and solve control system models. Techniques from numerical analysis, such as finite difference methods or numerical integration, are used to approximate the behavior of the system and compute control strategies.

6. Graph Theory: Control theory can also be applied to networked systems and multi-agent systems, where graph theory is used to model and analyze the interactions between different components or agents. Graph algorithms and topological properties are utilized to study the controllability and observability of such systems.
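
As an example of how linear algebra and numerical methods support stability analysis, the sketch below solves the continuous-time Lyapunov equation A^T P + P A = -Q by rewriting it as an ordinary linear system with Kronecker products. For a positive definite Q (and when the equation has a unique solution), P is positive definite exactly when every eigenvalue of A has negative real part, so the check doubles as a stability test. The two matrices are hypothetical examples. Libraries such as SciPy offer dedicated solvers (for example `scipy.linalg.solve_continuous_lyapunov`), and the dense Kronecker approach shown here only suits small systems because it builds an n^2-by-n^2 matrix.

```python
import numpy as np


def solve_lyapunov(A, Q):
    """Solve the continuous Lyapunov equation A' P + P A = -Q for P.

    Uses vec(A' P + P A) = (I kron A' + A' kron I) vec(P), with column-major vec.
    """
    n = A.shape[0]
    I = np.eye(n)
    M = np.kron(I, A.T) + np.kron(A.T, I)
    p = np.linalg.solve(M, -Q.flatten(order="F"))
    return p.reshape((n, n), order="F")


def lyapunov_certifies_stability(A):
    """True if P (for Q = I) is positive definite, i.e. dx/dt = A x is asymptotically stable."""
    P = solve_lyapunov(A, np.eye(A.shape[0]))
    P = 0.5 * (P + P.T)  # remove round-off asymmetry before the symmetric eigenvalue check
    return bool(np.all(np.linalg.eigvalsh(P) > 0))


A_stable = np.array([[0.0, 1.0], [-2.0, -3.0]])   # eigenvalues -1 and -2
A_unstable = np.array([[0.0, 1.0], [2.0, -1.0]])  # eigenvalues 1 and -2

print(solve_lyapunov(A_stable, np.eye(2)))       # approximately [[1.25, 0.25], [0.25, 0.25]]
print(lyapunov_certifies_stability(A_stable))    # True
print(lyapunov_certifies_stability(A_unstable))  # False
```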

In summary, control theory draws on various branches of mathematics to provide a rigorous framework for understanding and controlling dynamic systems. By leveraging techniques from differential equations, linear algebra, optimization theory, probability theory, numerical methods, and graph theory, control engineers can design effective control strategies for a wide range of applications.

Limitations and future developments in Control Theory

Control theory is a branch of engineering and mathematics that deals with the principles and methods for controlling systems, particularly dynamic systems. While control theory has made significant contributions to various fields, it also has certain limitations and areas for future developments.

1. Complexity: Control theory may struggle to handle complex systems due to their intricate dynamics and high dimensionality. Developing control algorithms that can effectively control large-scale or nonlinear systems remains a challenge.

2. Uncertainty: Many real-world systems are subject to uncertainties, such as disturbances, modeling errors, and parameter variations. Classical design methods account for uncertainty only indirectly, through stability margins, while robust control methods provide explicit guarantees but can become conservative, or fail to find a solution, when the uncertainty is large.

3. Computational demands: Some control algorithms, particularly optimal or model predictive control, can be computationally expensive, limiting real-time implementation. Efficient algorithms are required to reduce the computational burden while maintaining acceptable control performance.

4. Lack of adaptability: Many control design methods assume that the system model is known and accurate. In practice, however, system models often change over time or are difficult to determine precisely. Developing adaptive control algorithms that reliably adjust to varying system dynamics remains an important research direction.

5. Integration with machine learning: Control theory can benefit from advancements in machine learning and data-driven modeling. Integrating control theory with machine learning techniques, such as reinforcement learning and neural networks, could enhance control performance and enable control of complex, unknown systems.

6. Networked control systems: With the rise of distributed systems and networked control, control theory needs to address the challenges of communication delays, packet losses, and limited bandwidth. Developing control algorithms that can handle these issues and ensure stability and performance is an active area of research.

7. Human factors: As control systems become more prevalent in human-centric applications, such as autonomous vehicles or medical devices, considering human factors becomes crucial. Incorporating human behavior and preferences into control algorithms to achieve human-centric control is an emerging field of research.
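
To make the networked-control point about communication delays concrete, the sketch below uses a hypothetical scalar discrete-time plant x[t+1] = a x[t] + u[t - d], where the actuator applies a command computed d sampling periods earlier, under the proportional feedback u[t] = -k x[t]. It compares the closed-loop spectral radius (the loop is stable when it is below 1) with and without a one-step delay. The values a = 1 and k = 1.5 are chosen only to show the effect.

```python
import numpy as np


def closed_loop_spectral_radius(a, k, delay):
    """Spectral radius for x[t+1] = a*x[t] + u[t - delay] under u[t] = -k*x[t].

    Substituting the control law gives x[t+1] = a*x[t] - k*x[t - delay], whose
    characteristic polynomial is z^(delay+1) - a*z^delay + k.
    """
    if delay == 0:
        coeffs = [1.0, k - a]
    else:
        coeffs = [1.0, -a] + [0.0] * (delay - 1) + [k]
    return float(np.max(np.abs(np.roots(coeffs))))


a, k = 1.0, 1.5  # hypothetical integrating plant and feedback gain
print(closed_loop_spectral_radius(a, k, delay=0))    # 0.5: stable
print(closed_loop_spectral_radius(a, k, delay=1))    # about 1.22: unstable
print(closed_loop_spectral_radius(a, 0.5, delay=1))  # about 0.71: stable with a lower gain
```

With no delay the loop is comfortably stable, but the same gain becomes unstable once a single sample of delay is added. Lowering the gain to k = 0.5 restores stability, at the price of a slower response than the undelayed loop achieves, which is the kind of trade-off delay-aware design has to manage.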

Future developments in control theory are focused on addressing these limitations:

1. Nonlinear and robust control: Developing control methods that can handle nonlinear and uncertain systems more effectively, such as adaptive control and robust control frameworks with guaranteed stability and performance.

2. Machine learning for control: Incorporating machine learning techniques, such as reinforcement learning and neural networks, into control algorithms to improve control performance, handle uncertainties, and adapt to changing system dynamics.

3. Data-driven control: Utilizing data-driven modeling and identification techniques, such as system identification and machine learning, to develop control algorithms based on real-time data instead of relying on precise system models.

4. Networked and distributed control: Developing control algorithms that can handle communication delays, limited bandwidth, and packet losses in networked control systems, enabling control of distributed and large-scale systems.

5. Human-centric control: Integrating human factors into control algorithms to enhance human-machine interaction, considering human behavior, preferences, and safety aspects in control design.
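
Data-driven control, item 3 above, can be sketched in its simplest form: fit a model to recorded input and state data, then design a controller for the fitted model. The example assumes a hypothetical scalar plant x[t+1] = a x[t] + b u[t] with true values a = 0.9 and b = 0.5, which are used only to generate noisy data. The technique shown is ordinary least-squares identification followed by a deadbeat gain k = a_hat / b_hat for the law u[t] = -k x[t], which places the identified closed-loop pole at zero. Practical data-driven and adaptive schemes are considerably more elaborate.

```python
import numpy as np


def identify_first_order(x, u):
    """Least-squares fit of x[t+1] ~ a*x[t] + b*u[t] from recorded data."""
    Phi = np.column_stack([x[:-1], u[:-1]])  # one regressor row per time step
    theta, *_ = np.linalg.lstsq(Phi, x[1:], rcond=None)
    return theta  # [a_hat, b_hat]


# Hypothetical "unknown" plant, used only to generate data for the demo.
rng = np.random.default_rng(0)
a_true, b_true, n = 0.9, 0.5, 200
u = rng.normal(size=n)  # random input that excites the dynamics
x = np.zeros(n)
for t in range(n - 1):
    x[t + 1] = a_true * x[t] + b_true * u[t] + 0.01 * rng.normal()

a_hat, b_hat = identify_first_order(x, u)
k = a_hat / b_hat  # deadbeat gain: a_hat - b_hat*k = 0 for the identified model
print("estimates:", a_hat, b_hat, "gain:", k)
print("closed-loop pole on the true plant:", a_true - b_true * k)
```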

In summary, control theory has important limitations, such as complexity and uncertainty handling, computational demands, and lack of adaptability. Future developments in control theory aim to address these challenges through advancements in nonlinear and robust control, machine learning for control, data-driven control, networked and distributed control, and human-centric control.

