Definition of Eigenvector

An eigenvector of a square matrix is a non-zero vector that the matrix only scales: multiplying the matrix by the vector gives a scalar multiple of that same vector. In other words, the resulting vector is parallel to the original eigenvector, although it may point the opposite way if the scalar is negative. The scalar factor is known as the eigenvalue corresponding to that eigenvector. Eigenvectors and eigenvalues are fundamental concepts in linear algebra and are useful in various mathematical and scientific applications, such as solving systems of linear differential equations and analyzing the behavior of dynamical systems.

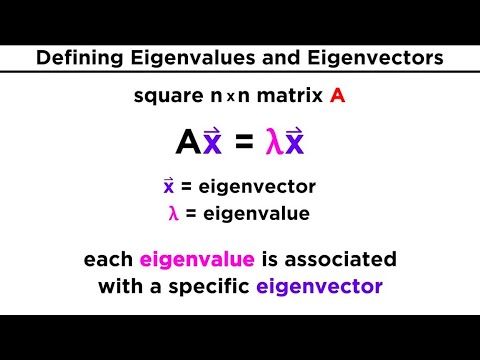

Eigenvectors and Eigenvalues

In linear algebra, eigenvectors and eigenvalues are concepts used to analyze the behavior of linear transformations and matrices.

An eigenvector of a linear transformation or matrix is a nonzero vector that stays on the same line through the origin after the transformation. In other words, the transformation only scales it by a scalar value, reversing it if that scalar is negative.

More formally, given a linear transformation T or a square matrix A, an eigenvector v is a nonzero vector that satisfies the equation:

T(v) = λv

or

Av = λv

where λ is a scalar value known as the eigenvalue associated with the eigenvector.

So, when we apply the linear transformation or multiply the matrix A by an eigenvector v, the resulting vector is parallel to v and scaled by a factor of λ.
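As a quick check of the defining equation Av = λv, here is a minimal sketch in plain Python. The matrix A and the test vectors are illustrative choices, not taken from any particular application, and `mat_vec` is a helper written just for this example.

```python
def mat_vec(A, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

# Example matrix chosen for illustration.
A = [[2, 1],
     [1, 2]]

v1 = [1, 1]    # eigenvector with eigenvalue 3
v2 = [1, -1]   # eigenvector with eigenvalue 1
w = [1, 0]     # not an eigenvector of A

print(mat_vec(A, v1))  # [3, 3], which is 3 * v1
print(mat_vec(A, v2))  # [1, -1], which is 1 * v2
print(mat_vec(A, w))   # [2, 1], not a scalar multiple of w
```

The first two products are parallel to the input vectors, so those vectors are eigenvectors; the third product changes direction, so w is not.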

Finding the eigenvectors and eigenvalues of a linear transformation or matrix is an important task in various applications, such as solving systems of linear differential equations, analyzing stability in dynamic systems, and understanding the behavior of quantum systems.
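For a 2 x 2 matrix, the eigenvalues can be found by hand as the roots of the characteristic polynomial λ² − (trace)λ + (determinant) = 0. The sketch below assumes real eigenvalues and a matrix [[a, b], [c, d]]; the function names `eigenvalues_2x2` and `eigenvector_2x2` are hypothetical helpers for this illustration. For larger matrices a numerical library would normally be used instead.

```python
import math

def eigenvalues_2x2(a, b, c, d):
    """Real eigenvalues of [[a, b], [c, d]] from the characteristic polynomial."""
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("eigenvalues are complex; this sketch handles real ones only")
    root = math.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2

def eigenvector_2x2(a, b, c, d, lam):
    """One non-zero solution v of (A - lam*I) v = 0 for an eigenvalue lam."""
    if b != 0:
        return [b, lam - a]
    if c != 0:
        return [lam - d, c]
    # Diagonal matrix: the standard basis vectors are eigenvectors.
    return [1.0, 0.0] if lam == a else [0.0, 1.0]

# Illustrative matrix [[4, 1], [2, 3]].
lam1, lam2 = eigenvalues_2x2(4, 1, 2, 3)
print(lam1, lam2)                          # 5.0 2.0
print(eigenvector_2x2(4, 1, 2, 3, lam1))   # proportional to [1, 1]
print(eigenvector_2x2(4, 1, 2, 3, lam2))   # proportional to [1, -2]
```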

Eigenvectors and eigenvalues have several useful properties and applications. They can help decompose a matrix into its diagonalized form, simplify the analysis of linear transformations, and aid in solving differential equations. They also play a crucial role in principal component analysis (PCA), a statistical technique used for data dimensionality reduction and feature extraction.

In summary, eigenvectors are nonzero vectors that a linear transformation only scales, while eigenvalues are the scalar values by which these eigenvectors are scaled. They are fundamental concepts in linear algebra and have various applications in mathematics, physics, and data analysis.

Properties of Eigenvectors

Eigenvectors are a fundamental concept in linear algebra and have several important properties.

1. Non-zero vectors: Eigenvectors are non-zero vectors, meaning they have a length or magnitude greater than zero. The zero vector is excluded by definition, because A0 = λ0 holds for every scalar λ and so would not single out any eigenvalue.

2. Scaling: Eigenvectors are closed under non-zero scalar multiplication. This means that if v is an eigenvector of a matrix A with eigenvalue λ, then any non-zero scalar multiple of v (kv, where k is a non-zero scalar) is also an eigenvector with the same eigenvalue λ.

3. Direction: Eigenvectors stay on the same line through the origin when multiplied by the matrix. If v is an eigenvector of a matrix A, then Av is a scalar multiple of v: it points in the same direction when the eigenvalue is positive, in the opposite direction when it is negative, and is the zero vector when the eigenvalue is zero.

4. Eigenvalues: Eigenvectors correspond to eigenvalues, which are the scalars by which the vector is scaled when multiplied by a matrix. Each eigenvector is associated with exactly one eigenvalue, but a single eigenvalue can have many eigenvectors.

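5. Orthogonality: Eigenvectors corresponding to distinct eigenvalues are always linearly independent. For real symmetric matrices (and, more generally, Hermitian or normal matrices) they are also orthogonal (perpendicular) to each other. This is not true for arbitrary matrices. The orthogonality property is useful in applications such as the diagonalization of symmetric matrices.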

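6. Spanning space: When an n x n matrix has n linearly independent eigenvectors, those eigenvectors form a basis for the vector space, so any vector in that space can be expressed as a linear combination of eigenvectors. Not every matrix has enough eigenvectors for this; for example, the matrix with rows (1, 1) and (0, 1) has only one independent eigenvector.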

7. Diagonalization: If an n x n matrix A has a complete set of n linearly independent eigenvectors, it can be diagonalized. This means that it can be written as A = PDP⁻¹, where the columns of P are the eigenvectors and D is a diagonal matrix with the corresponding eigenvalues on its diagonal and zeros elsewhere. Diagonalization is useful for simplifying calculations involving matrices, such as computing powers of A, and for understanding their properties.
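The diagonalization property can be illustrated with a small worked example. The sketch below assumes the illustrative matrix A = [[4, 1], [2, 3]], whose eigenvalues are 5 and 2 with eigenvectors (1, 1) and (1, -2). It checks that A = PDP⁻¹ and then uses the factorization to compute a power of A. The helpers `mat_mul` and `inverse_2x2` are written for this example only, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

def mat_mul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

def inverse_2x2(M):
    """Exact inverse of a 2x2 matrix using fractions."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

A = [[4, 1], [2, 3]]
P = [[1, 1], [1, -2]]   # columns are the eigenvectors (1, 1) and (1, -2)
D = [[5, 0], [0, 2]]    # matching eigenvalues on the diagonal
P_inv = inverse_2x2(P)

# A is recovered from its eigenvectors and eigenvalues.
reconstructed = mat_mul(mat_mul(P, D), P_inv)
print(reconstructed == A)  # True

# Powers of A only require powers of the diagonal entries.
k = 3
D_k = [[5 ** k, 0], [0, 2 ** k]]
A_k_diag = mat_mul(mat_mul(P, D_k), P_inv)
A_k_direct = mat_mul(mat_mul(A, A), A)
print(A_k_diag == A_k_direct)  # True
```

The payoff is in the last step: raising D to a power only means raising each diagonal entry to that power, which is far cheaper than repeated matrix multiplication when k is large.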

Overall, eigenvectors play a crucial role in linear algebra and have various applications in fields such as physics, computer science, and engineering.

Applications of Eigenvectors

Eigenvectors are widely used in different fields for various applications. Some of the key applications of eigenvectors are:

1. Principal Component Analysis (PCA): Eigenvectors are extensively used in PCA to reduce the dimensions of a dataset. By finding the eigenvectors of the covariance matrix, PCA helps to identify the most significant directions in the data and projects the data onto those directions, resulting in a lower-dimensional representation that retains the most important information.

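2. Image Compression: Eigenvectors underlie image compression techniques based on the Singular Value Decomposition (SVD). The singular vectors of an image matrix A are eigenvectors of AᵀA and AAᵀ, and keeping only the components with the largest singular values gives a compact approximation of the image. The Discrete Cosine Transform (DCT) used in formats such as JPEG is a related idea: it uses a fixed cosine basis rather than eigenvectors computed from the image itself.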

3. Network Analysis: Eigenvectors play a crucial role in network analysis, particularly in the analysis of large-scale networks or graphs. A popular example is the Google PageRank algorithm, which uses eigenvectors to determine the importance or ranking of web pages based on their connectivity patterns within the web graph.

4. Quantum Mechanics: In quantum mechanics, eigenvectors are used to describe the possible states of a physical system. The eigenvectors of the operator for an observable represent states in which that observable has a definite value, and the corresponding eigenvalues are the possible results of measuring it.

5. Structural Engineering: Eigenvectors are used in structural engineering to analyze the dynamic behavior of structures. For example, in modal analysis, the eigenvalues give the natural frequencies of a structure and the eigenvectors give its mode shapes, both of which are important for assessing its stability and response to external forces.

6. Machine Learning: Eigenvectors are employed in various machine learning algorithms. For instance, in the "eigenfaces" approach to face recognition, the eigenvectors of the covariance matrix of a set of face images serve as principal components, and each face is represented by its coordinates along those components for recognition and classification.
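To make the network-analysis application above more concrete, the following sketch finds a ranking vector by power iteration, that is, by repeatedly multiplying a link matrix by a vector until it settles on the eigenvector with eigenvalue 1. This is a simplified illustration and not the full PageRank algorithm: it omits the damping factor, and the three-page graph and the function name `power_iteration` are assumptions made for the example.

```python
def power_iteration(M, steps=100):
    """Approximate the dominant eigenvector of M, normalized to sum to 1."""
    n = len(M)
    r = [1.0 / n] * n  # start from a uniform ranking
    for _ in range(steps):
        r = [sum(M[i][j] * r[j] for j in range(n)) for i in range(n)]
        total = sum(r)
        r = [x / total for x in r]
    return r

# Hypothetical web graph: page 0 links to pages 1 and 2,
# page 1 links to page 2, and page 2 links to page 0.
# Column j holds the probabilities of moving from page j to each page.
M = [[0.0, 0.0, 1.0],
     [0.5, 0.0, 0.0],
     [0.5, 1.0, 0.0]]

ranks = power_iteration(M)
print([round(x, 3) for x in ranks])  # [0.4, 0.2, 0.4]
```

Pages 0 and 2 end up with the highest rank because each receives all of the other's outgoing weight, while page 1 only receives half of page 0's.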

Overall, eigenvectors have diverse applications across different scientific, engineering, and technological domains, providing valuable insights and solutions to various problems.

Conclusion

In conclusion, eigenvectors play a significant role in linear algebra and have several important applications. They provide a way to understand the behavior of linear transformations and matrices, helping to simplify complex systems and analyze their stability. Eigenvectors are also used in various fields such as data analysis, image processing, and quantum mechanics. By understanding eigenvectors and their corresponding eigenvalues, we can gain valuable insights into the underlying structure and characteristics of a given system. Overall, eigenvectors are a powerful tool in mathematics and have widespread applications in various disciplines.

Topics related to Eigenvector

Finding Eigenvalues and Eigenvectors – YouTube

❖ Finding Eigenvalues and Eigenvectors : 2 x 2 Matrix Example ❖ – YouTube

21. Eigenvalues and Eigenvectors – YouTube

Introduction to eigenvalues and eigenvectors | Linear Algebra | Khan Academy – YouTube

The applications of eigenvectors and eigenvalues | That thing you heard in Endgame has other uses – YouTube

🔷15 – Eigenvalues and Eigenvectors of a 3×3 Matrix – YouTube

Oxford Linear Algebra: Eigenvalues and Eigenvectors Explained – YouTube

15 – What are Eigenvalues and Eigenvectors? Learn how to find Eigenvalues. – YouTube

Eigen values and eigen vectors # Double root # Matrices # Algebra – YouTube

22. Diagonalization and Powers of A – YouTube
