Definition of R-squared in mathematics

In mathematics, R-squared (or coefficient of determination) is a statistical measure that indicates how well a regression model fits the observed data. It is a relative measure of how well the dependent variable is explained by the independent variables in the model.

R-squared usually ranges from 0 to 1, with 0 indicating that the model explains none of the variability of the target variable and 1 indicating that the model explains all of the variability. It is calculated as one minus the ratio of the unexplained (residual) variation to the total variation of the target variable. For an ordinary least-squares regression with an intercept, this equals the squared correlation between the predicted values of the model and the actual values of the target variable. In simple terms, it represents the proportion of the variance in the dependent variable that is predictable from the independent variables in the model.
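As a quick check of the squared-correlation statement, the pure-Python sketch below fits a least-squares line to a small made-up data set and computes R-squared two ways: as 1 - SSE/SST (residual sum of squares over total sum of squares) and as the squared Pearson correlation between the observed and fitted values. The helper names `fit_line`, `r_squared` and `pearson` are illustrative, not from any library.

```python
from statistics import mean

def fit_line(x, y):
    """Least-squares intercept a and slope b for y approximately a + b*x."""
    x_bar, y_bar = mean(x), mean(y)
    b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - b * x_bar, b

def r_squared(y, y_hat):
    """R-squared as 1 - SSE/SST (residual over total sum of squares)."""
    y_bar = mean(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - sse / sst

def pearson(u, v):
    """Pearson correlation coefficient of two equal-length sequences."""
    u_bar, v_bar = mean(u), mean(v)
    cov = sum((ui - u_bar) * (vi - v_bar) for ui, vi in zip(u, v))
    su = sum((ui - u_bar) ** 2 for ui in u) ** 0.5
    sv = sum((vi - v_bar) ** 2 for vi in v) ** 0.5
    return cov / (su * sv)

x = [1, 2, 3, 4, 5, 6]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]  # made-up data
a, b = fit_line(x, y)
y_hat = [a + b * xi for xi in x]  # fitted values of the least-squares line

print(r_squared(y, y_hat))
print(pearson(y, y_hat) ** 2)  # same value up to floating-point rounding
```

The equality relies on the fit being least squares with an intercept; for other kinds of predictions the two numbers can differ.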

R-squared is often used in statistical analysis and regression modeling to evaluate the goodness-of-fit of the model, or to compare different models. However, it is important to note that R-squared does not determine the causality or reliability of the model, and its interpretation can vary depending on the context and the specific variables involved.

Calculation of R-squared

R-squared, also known as the coefficient of determination, is a statistical measure that represents the proportion of the variance in the dependent variable (y) explained by the independent variable(s) (x).

To calculate R-squared, you can use the following steps:

1. Calculate the average value of the dependent variable, which we’ll call ȳ.

2. Fit the regression model and compute the predicted value (ŷ) for each observation. The difference between each observed value (y) and its predicted value (ŷ), e = y - ŷ, is called a residual.

3. Square each residual (e) to get the squared residuals.

4. Calculate the residual sum of squares (SSE), which is the sum of the squared residuals.

5. Calculate the total sum of squares (SST), which is the sum of the squared differences between each observed value of the dependent variable (y) and the average value (ȳ).

6. Calculate the regression sum of squares (SSR), which is the sum of the squared differences between each predicted value of the dependent variable (ŷ) and the average value (ȳ). For an ordinary least-squares model with an intercept, SST = SSR + SSE, so SSR/SST gives the same result as the formula below.

7. Calculate R-squared using the formula:

R-squared = 1 - (SSE/SST)
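The steps above can be sketched in pure Python for the simplest case, a straight-line least-squares fit with one predictor. The function name `r_squared_steps` and the data are made up for illustration. The variable names follow the convention SSE = residual, SSR = regression and SST = total sum of squares; textbooks differ here, and some use SSR for the residual sum instead.

```python
from statistics import mean

def r_squared_steps(x, y):
    """Follow the steps for a one-predictor least-squares line y approximately a + b*x."""
    # Step 1: average of the dependent variable
    y_bar = mean(y)

    # Step 2: fit the line, then compute predictions and residuals e = y - y_hat
    x_bar = mean(x)
    b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
    a = y_bar - b * x_bar
    y_hat = [a + b * xi for xi in x]
    residuals = [yi - fi for yi, fi in zip(y, y_hat)]

    # Steps 3-4: square the residuals and add them up (SSE)
    sse = sum(e ** 2 for e in residuals)

    # Step 5: total sum of squares (SST)
    sst = sum((yi - y_bar) ** 2 for yi in y)

    # Step 6: regression sum of squares (SSR); SST = SSR + SSE for this fit
    ssr = sum((fi - y_bar) ** 2 for fi in y_hat)

    # Step 7: R-squared
    return {"sse": sse, "sst": sst, "ssr": ssr, "r2": 1 - sse / sst}

result = r_squared_steps([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(round(result["r2"], 6))  # 0.6
```

For this data the fitted line is ŷ = 2.2 + 0.6x, and the sums are SSE = 2.4, SSR = 3.6 and SST = 6, so SSR + SSE = SST and R-squared = 1 - 2.4/6 = 0.6.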

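For an ordinary least-squares model with an intercept, evaluated on the data used to fit it, R-squared ranges from 0 to 1, where a value of 0 indicates that the independent variable(s) explain none of the variance in the dependent variable, and a value of 1 indicates that the independent variable(s) explain all of the variance. If the model has no intercept, or if the formula is applied to other predictions (for example, on new data), it can return a negative value, meaning the model predicts worse than simply using ȳ.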
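The 0-to-1 range is a property of least-squares fits with an intercept, evaluated on their own training data. Applied to arbitrary predictions, the formula 1 - SSE/SST (residual over total sum of squares) returns 0 for a model that always predicts the mean and goes negative for predictions worse than that. The sketch below shows both cases with made-up numbers and a hypothetical "backwards" model.

```python
from statistics import mean

def r_squared(y, y_hat):
    """R-squared as 1 - SSE/SST for any set of predictions y_hat."""
    y_bar = mean(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - sse / sst

y = [1.0, 2.0, 3.0, 4.0, 5.0]
mean_only = [mean(y)] * len(y)         # always predict the mean
backwards = [5.0, 4.0, 3.0, 2.0, 1.0]  # hypothetical model with the trend reversed

print(r_squared(y, mean_only))  # 0.0
print(r_squared(y, backwards))  # -3.0
```

Here SST = 10, while the backwards predictions give SSE = 40, so R-squared = 1 - 40/10 = -3.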

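It’s important to note that R-squared measures only the proportion of variance explained, which is one aspect of goodness of fit. It does not indicate the accuracy or precision of the regression model: because R-squared is unitless, it says nothing about how large the prediction errors are in the units of the dependent variable.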
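To illustrate that R-squared does not measure the size of the errors, the sketch below fits a least-squares line to the same made-up data twice: once as given and once with y multiplied by 100 (as if the same quantity were recorded in a unit 100 times smaller). It reports R-squared alongside the root-mean-square error (RMSE, the square root of the mean squared residual). The helper name `fit_and_score` is hypothetical.

```python
from math import sqrt
from statistics import mean

def fit_and_score(x, y):
    """Fit a least-squares line; return (R-squared, RMSE) on the same data."""
    x_bar, y_bar = mean(x), mean(y)
    b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
    a = y_bar - b * x_bar
    residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in residuals)
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - sse / sst, sqrt(sse / len(y))

x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
y_scaled = [100 * v for v in y]  # same data, rescaled by a factor of 100

r2_a, rmse_a = fit_and_score(x, y)
r2_b, rmse_b = fit_and_score(x, y_scaled)
print(f"original: R^2 = {r2_a:.3f}, RMSE = {rmse_a:.3f}")
print(f"scaled:   R^2 = {r2_b:.3f}, RMSE = {rmse_b:.3f}")
```

R-squared is 0.6 in both cases, while the RMSE goes from about 0.69 to about 69.3.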

Interpretation of R-squared

The R-squared value, also known as the coefficient of determination, is a statistical measure that indicates the proportion of variance in the dependent variable that is predictable from the independent variable(s). It ranges from 0 to 1.

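An R-squared value of 0 suggests that the independent variable(s) have no explanatory power in the fitted model: its predictions do no better than simply using the average value ȳ. As far as the model is concerned, all the variability in the dependent variable is due to other factors or random chance. For a linear model, this does not rule out a nonlinear relationship that the model fails to capture.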

On the other hand, an R-squared value of 1 indicates that the independent variable(s) completely explain the variability in the dependent variable. For a linear regression, every observed point then lies exactly on the fitted line (or plane), so in the sample the dependent variable is an exact linear function of the independent variable(s). This describes the data rather than causation: it does not show that changing an independent variable would change the dependent variable.
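The two extremes can be illustrated with a small made-up example. The pure-Python sketch below, with hypothetical helper names, fits a least-squares line to data lying exactly on a line, which gives R-squared = 1. It then fits a line to the symmetric data y = x², where the fitted slope is zero and R-squared = 0 even though y is completely determined by x. The second case shows that the R-squared of a straight-line fit reflects only the linear part of a relationship.

```python
from statistics import mean

def fit_line(x, y):
    """Least-squares intercept a and slope b for y approximately a + b*x."""
    x_bar, y_bar = mean(x), mean(y)
    b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - b * x_bar, b

def r_squared_of_line(x, y):
    """R-squared (1 - SSE/SST) of the least-squares line fitted to (x, y)."""
    a, b = fit_line(x, y)
    y_bar = mean(y)
    sse = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - sse / sst

x = [-2, -1, 0, 1, 2]
y_linear = [3 * xi + 1 for xi in x]  # exactly on a line
y_quadratic = [xi ** 2 for xi in x]  # fully determined by x, but not linear

r2_linear = r_squared_of_line(x, y_linear)
r2_quadratic = r_squared_of_line(x, y_quadratic)
print(r2_linear)     # 1.0
print(r2_quadratic)  # 0.0
```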

In practice, R-squared values between 0 and 1 are more common. A higher R-squared value indicates that a larger portion of the variability in the dependent variable can be attributed to the independent variable(s). It is often used as a measure of how well a regression model fits the data, with higher values indicating better model fit. However, it is important to note that R-squared alone does not determine the validity or usefulness of a model, and it should be interpreted in conjunction with other statistical measures and subject knowledge.

Limitations of R-squared

There are several limitations associated with the use of R-squared as a measure of model fit:

1. R-squared is sensitive to the number of predictors in the model: in an ordinary least-squares model, adding a predictor never lowers R-squared, even if that predictor has no meaningful relationship with the outcome variable. Judging a model by R-squared alone can therefore reward the inclusion of irrelevant predictors and inflate the apparent fit. Adjusted R-squared, which penalizes each additional predictor, is often reported for this reason.

2. R-squared does not indicate whether the predictors in the model are statistically significant: R-squared only measures how well the predictors collectively explain the variation in the outcome variable, but it does not indicate whether individual predictors have a significant effect or whether they are spurious.

3. R-squared cannot determine causality: R-squared can show that there is a strong relationship between the predictors and the outcome variable, but it cannot establish a cause-and-effect relationship. There may be other unmeasured factors or variables that are driving the observed relationship.

4. R-squared does not provide information about the goodness of fit for out-of-sample data: R-squared is calculated based on the observed data used to fit the model. It does not provide any insights into how well the model will perform when applied to new, unseen data.

5. R-squared can be influenced by outliers: Outliers in the data can have a significant impact on the R-squared value. In some cases, the inclusion or exclusion of outliers can greatly alter the R-squared value, making it less reliable as a measure of model fit.

6. R-squared does not indicate the predictive power of the model: R-squared measures how well the model explains the observed variation in the outcome variable, but it does not necessarily reflect the model’s ability to make accurate predictions on new data.

7. R-squared can be misleading in the presence of multicollinearity: when the predictors are highly correlated with one another, R-squared for the model as a whole can be high even though the individual coefficient estimates are unstable and imprecise. A high R-squared then says little about the importance of any single predictor.
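Limitation 1 can be demonstrated on a small made-up data set. The pure-Python sketch below fits an ordinary least-squares model with an intercept, first using x alone and then adding a predictor z, an arbitrary sequence with no intended relationship to y. R-squared cannot go down when z is added. Adjusted R-squared, 1 - (1 - R²)(n - 1)/(n - k - 1) for n observations and k predictors, penalizes the extra term. The normal-equation solver is a minimal illustration, not a numerically robust implementation, and all names are hypothetical.

```python
from statistics import mean

def ols_predictions(columns, y):
    """Fitted values of an OLS model with an intercept.

    `columns` is a list of predictor lists. Solves the normal equations
    (X^T X) beta = X^T y by Gaussian elimination with partial pivoting;
    adequate for tiny examples, not a robust general-purpose solver.
    """
    n = len(y)
    X = [[1.0] + [float(col[i]) for col in columns] for i in range(n)]
    p = len(X[0])
    # Augmented matrix [X^T X | X^T y]
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
         + [sum(X[r][i] * y[r] for r in range(n))]
         for i in range(p)]
    # Forward elimination
    for c in range(p):
        pivot = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[pivot] = A[pivot], A[c]
        for r in range(c + 1, p):
            factor = A[r][c] / A[c][c]
            for j in range(c, p + 1):
                A[r][j] -= factor * A[c][j]
    # Back substitution
    beta = [0.0] * p
    for i in reversed(range(p)):
        tail = sum(A[i][j] * beta[j] for j in range(i + 1, p))
        beta[i] = (A[i][p] - tail) / A[i][i]
    return [sum(b * v for b, v in zip(beta, row)) for row in X]

def r_squared(y, y_hat):
    """R-squared as 1 - SSE/SST."""
    y_bar = mean(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - sse / sst

def adjusted_r_squared(r2, n, k):
    """Adjusted R-squared for n observations and k predictors (excluding the intercept)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

# Made-up data: y rises with x; z is an arbitrary sequence not used to build y.
x = [1, 2, 3, 4, 5, 6, 7, 8]
z = [3, 1, 4, 1, 5, 9, 2, 6]
y = [2.3, 3.8, 6.1, 8.4, 9.7, 12.2, 13.9, 16.1]
n = len(y)

r2_x = r_squared(y, ols_predictions([x], y))
r2_xz = r_squared(y, ols_predictions([x, z], y))
adj_x = adjusted_r_squared(r2_x, n, k=1)
adj_xz = adjusted_r_squared(r2_xz, n, k=2)

print(f"x only:  R^2 = {r2_x:.4f}, adjusted = {adj_x:.4f}")
print(f"x and z: R^2 = {r2_xz:.4f}, adjusted = {adj_xz:.4f}")
```

Whether adjusted R-squared rises or falls for a particular added predictor depends on how much that predictor reduces the residual sum of squares. In practice a statistics library would be used instead of a hand-written solver; for example, statsmodels' OLS results report both `rsquared` and `rsquared_adj`.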

In summary, while R-squared is a commonly used measure of model fit, it should be interpreted with caution due to its limitations. It is important to consider other measures and diagnostic tools alongside R-squared to obtain a comprehensive understanding of the model’s performance and validity.
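Limitation 5 can be seen in a small made-up example. The sketch below fits a least-squares line to ten points lying close to y = 2x. It then refits after adding a single hypothetical point far above the line, near the middle of the x range. The numbers are illustrative only; how much R-squared changes depends on where the outlier sits.

```python
from statistics import mean

def r_squared_of_line(x, y):
    """R-squared (1 - SSE/SST) of a least-squares line fitted to (x, y)."""
    x_bar, y_bar = mean(x), mean(y)
    b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
    a = y_bar - b * x_bar
    sse = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - sse / sst

# Made-up data close to y = 2x, with small deterministic deviations
x = [float(i) for i in range(10)]
noise = [0.1, -0.1, 0.2, -0.2, 0.0, 0.1, -0.1, 0.2, -0.2, 0.0]
y = [2 * xi + d for xi, d in zip(x, noise)]

r2_clean = r_squared_of_line(x, y)
# Refit with one hypothetical outlier at x = 4.5, y = 40
r2_outlier = r_squared_of_line(x + [4.5], y + [40.0])

print(f"without outlier: R^2 = {r2_clean:.4f}")
print(f"with outlier:    R^2 = {r2_outlier:.4f}")
```

With these numbers, one point out of eleven pulls R-squared from above 0.99 to well under 0.5.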

Applications of R-squared in mathematics

R-squared is a statistical measure used to assess the goodness of fit of a regression model in the field of statistics. However, its applications are not confined to just statistics. R-squared can also be applied in certain areas of mathematics, particularly in the context of linear regression and data analysis. Here are a few applications of R-squared in mathematics:

1. Linear regression analysis: R-squared is commonly used as a measure of how well a linear regression model fits the given data points. It quantifies the proportion of the variability in the dependent variable that can be explained by the independent variables in the model. A higher R-squared value indicates a better fit, meaning that the model's linear relationship accounts for more of the variation in the data.

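2. Model comparison: R-squared can be used to compare different regression models fitted to the same dependent variable and the same data. By comparing their R-squared values, one can judge which model accounts for the most variance. Because R-squared never decreases when predictors are added to a least-squares model, adjusted R-squared or performance on held-out data is better suited to comparing models with different numbers of predictors. This helps in selecting the most appropriate model for making predictions or in understanding the relationship between variables.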

3. Evaluating mathematical models: R-squared can also be used to evaluate mathematical models that are not specifically regression models. For example, if a mathematical model is fitted to experimental data, the R-squared value can provide an indication of how well the model represents the observed data. A high R-squared value suggests that the model is a good representation of the data, while a low value indicates poor fit.

4. Assessing linearity: R-squared can be utilized to assess the linearity of a relationship between variables. In certain mathematical contexts, such as curve fitting or optimization, it can be crucial to determine whether a linear approximation is suitable. The R-squared value of a linear fit indicates how much of the variation a linear model captures, which helps decide whether such a model is appropriate. A high R-squared alone does not prove linearity, however, because a curved relationship can still produce a high value; a plot of the residuals is a useful complementary check.

5. Hypothesis testing: R-squared also appears in hypothesis testing. When testing the overall significance of a regression model, the F statistic for the null hypothesis that all slope coefficients are zero can be expressed in terms of R-squared, the number of observations and the number of predictors. In this case, R-squared serves as an indicator of whether the model explains a statistically significant amount of the variation in the dependent variable.
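Consider ordinary least squares with an intercept, n observations and k predictors. The overall F statistic for the hypothesis that all slope coefficients are zero can be written as F = (R²/k) / ((1 - R²)/(n - k - 1)). This is algebraically the same as (SSR/k) / (SSE/(n - k - 1)), where SSR is the regression sum of squares and SSE the residual sum of squares. The pure-Python sketch below computes F both ways on made-up data; the function name is hypothetical. Turning F into a p-value requires the F distribution with k and n - k - 1 degrees of freedom (for example `scipy.stats.f.sf` in SciPy), which is left out here to keep the example dependency-free.

```python
from statistics import mean

def f_statistic_from_r2(r2, n, k):
    """Overall F statistic for H0: all k slope coefficients are zero,
    for an OLS model with an intercept fitted to n observations."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))

# Made-up data with one predictor
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
n, k = len(y), 1

# Least-squares line y_hat = a + b*x
x_bar, y_bar = mean(x), mean(y)
b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
     / sum((xi - x_bar) ** 2 for xi in x))
a = y_bar - b * x_bar
y_hat = [a + b * xi for xi in x]

sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # residual sum of squares
ssr = sum((fi - y_bar) ** 2 for fi in y_hat)            # regression sum of squares
sst = sum((yi - y_bar) ** 2 for yi in y)                # total sum of squares
r2 = 1 - sse / sst

f_from_r2 = f_statistic_from_r2(r2, n, k)
f_from_sums = (ssr / k) / (sse / (n - k - 1))
print(round(f_from_r2, 6), round(f_from_sums, 6))  # 4.5 4.5
```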

Overall, R-squared has various applications within the field of mathematics, particularly in the realm of regression analysis, model evaluation, and assessing the linearity of mathematical relationships.

Topics related to R-squared

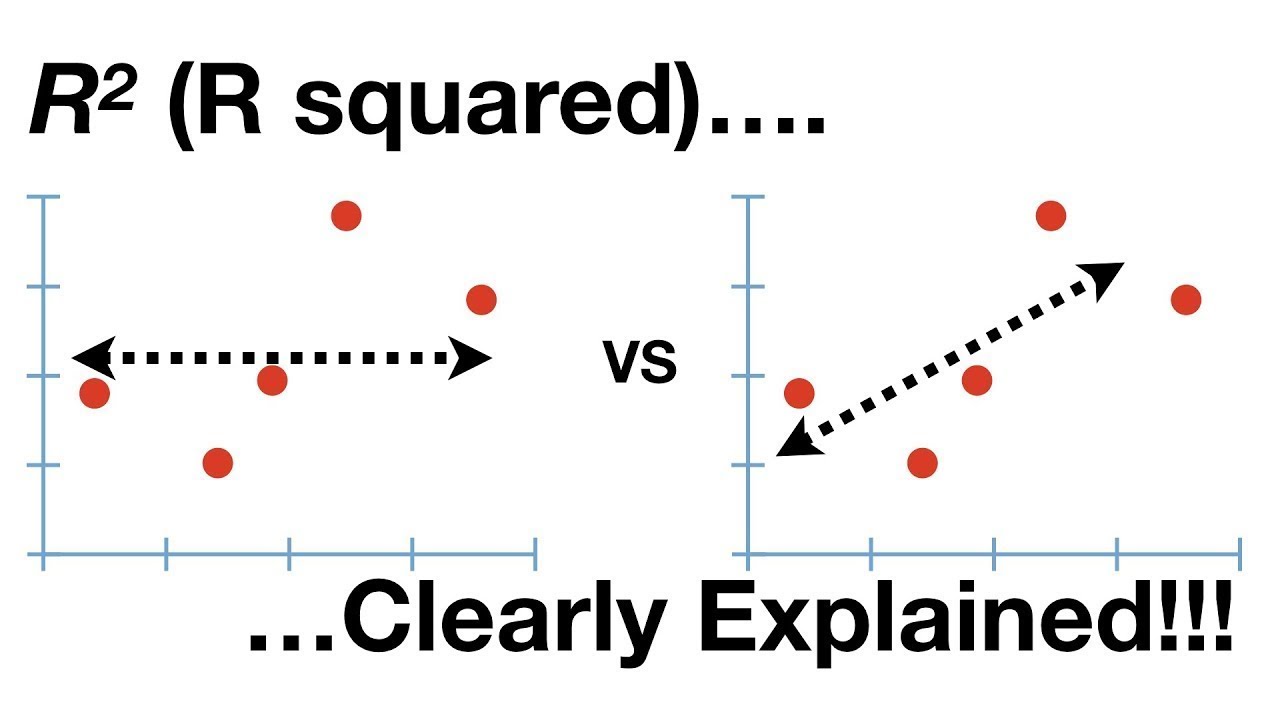

R-squared, Clearly Explained!!! – YouTube

R-squared, Clearly Explained!!! – YouTube

Math Made Easy by StudyPug! F3.0.0 – YouTube

Math Made Easy by StudyPug! F3.0.0 – YouTube

CSIR NET 2023 #csirnet #csirnetjrf https://www.rsquaredmathematics.in/t-10-test-series-for-csir-net – YouTube

CSIR NET 2023 #csirnet #csirnetjrf https://www.rsquaredmathematics.in/t-10-test-series-for-csir-net – YouTube

CSIR NET 2023 December 2023 official Notification Out. How to fill online form for CSIR NET. – YouTube

CSIR NET 2023 December 2023 official Notification Out. How to fill online form for CSIR NET. – YouTube

Important Topics in Linear Algebra for CSIR NET | CSIR NET 2023 | Complete Preparation Assistance – YouTube

Important Topics in Linear Algebra for CSIR NET | CSIR NET 2023 | Complete Preparation Assistance – YouTube

Eigen Values Eigen Vectors | Linear Algebra Lecture 13 – YouTube

Eigen Values Eigen Vectors | Linear Algebra Lecture 13 – YouTube

Introduction to Group Theory | Lecture – 01 | Abstract Algebra – YouTube

Introduction to Group Theory | Lecture – 01 | Abstract Algebra – YouTube

How to Crack CSIR NET 2023 | Test Series | How to attend Quiz – Task of the day – YouTube

How to Crack CSIR NET 2023 | Test Series | How to attend Quiz – Task of the day – YouTube

CSIR NET 2023 | What is Next | Mission JRF | Week – IV | Complete Preparation Assistance – YouTube

CSIR NET 2023 | What is Next | Mission JRF | Week – IV | Complete Preparation Assistance – YouTube

Important Topics Complex Analysis | CSIR NET 2023 | Complete Syllabus Discussion | Week – 3 – YouTube

Important Topics Complex Analysis | CSIR NET 2023 | Complete Syllabus Discussion | Week – 3 – YouTube
