Introduction to Bayesian Statistics

Bayesian statistics is a branch of statistics that incorporates prior knowledge or beliefs into the analysis of data. Named after the 18th-century mathematician Thomas Bayes, this approach allows for updating the probability of an event or hypothesis based on new evidence or data.

Unlike classical statistics, which relies heavily on frequentist methods and hypothesis testing, Bayesian statistics uses probability distributions to express uncertainty about unknown quantities. Instead of a strict yes-or-no decision, a Bayesian analysis reports a distribution of probabilities over the possible values, taking into account the prior beliefs about the quantity being analyzed.

Central to Bayesian statistics is Bayes’ theorem, which states that the probability of an event given certain evidence equals the prior probability of the event multiplied by the likelihood of the evidence given the event, divided by the overall probability of the evidence. This theorem allows beliefs to be updated when new information becomes available.
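
As a worked illustration of the theorem, the sketch below applies it to a hypothetical diagnostic test. The function name `posterior_probability` and the numbers (1% prevalence, 95% sensitivity, 5% false-positive rate) are assumptions chosen for illustration and do not come from any particular study.

```python
def posterior_probability(prior, likelihood, false_positive_rate):
    """Return P(H | E) via Bayes' theorem for a binary hypothesis H.

    prior:               P(H), belief in H before seeing the evidence
    likelihood:          P(E | H), probability of the evidence if H is true
    false_positive_rate: P(E | not H), probability of the evidence if H is false
    """
    # Overall probability of the evidence, P(E), by the law of total probability.
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: rare condition, fairly accurate test.
p = posterior_probability(prior=0.01, likelihood=0.95, false_positive_rate=0.05)
print(f"P(condition | positive test) = {p:.3f}")
```

With these assumed numbers the posterior is roughly 16%: the low prior probability keeps the updated belief modest even though the test is fairly accurate.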

Bayesian statistics is particularly useful when dealing with small sample sizes or complex models, as it integrates expert knowledge and subjective opinions to refine and improve the analysis. It is also valuable for decision-making in scenarios where uncertainty plays a significant role, as it provides a framework for assessing risks and making informed choices.

In recent years, Bayesian statistics has gained popularity due to advancements in computing power and the availability of sophisticated software that can handle complex Bayesian models. This has made it more accessible to researchers in various fields, including medicine, economics, psychology, and machine learning.

Overall, Bayesian statistics offers a flexible and powerful approach to data analysis, allowing for the incorporation of prior knowledge and subjective opinions. By reporting a range of probabilities instead of rigid conclusions, it gives a more comprehensive and realistic representation of uncertainty in statistical inference.

Basic Principles of Bayesian Statistics

Bayesian statistics is a branch of statistics that deals with the inference and decision-making process using a probabilistic approach. It is based on the principles of Bayesian inference, which are:

1. Prior Knowledge: Bayesian statistics incorporates the use of prior knowledge or beliefs about the parameters of interest before observing the data. The prior knowledge is expressed in terms of probability distributions.

2. Likelihood: The likelihood function describes the probability of observing the data given the parameters of the model. It quantifies the compatibility between the observed data and the parameter values.

3. Bayes’ Theorem: Bayes’ theorem is the fundamental equation of Bayesian statistics. It updates our prior beliefs based on the observed data and gives us the posterior distribution, which is the updated probability distribution of the parameters given the data. The theorem states that:

Posterior ∝ Likelihood × Prior

Posterior = P(Parameters|Data)

Likelihood = P(Data|Parameters)

Prior = P(Parameters)

4. Posterior Inference: Once we have the posterior distribution, we can compute various statistics, such as the mean, median, or credible intervals, to make inferences about the parameters.

5. Bayesian Decision Theory: Bayesian statistics provides a framework for decision-making by integrating the posterior distribution with utility or loss functions. This allows us to make decisions that maximize expected utility or minimize expected loss.

6. Updateability: Bayesian statistics is inherently updateable. As new data becomes available, we can update our posterior distribution by applying Bayes’ theorem again. This allows for iterative learning and refinement of our beliefs as more information is acquired.

These basic principles of Bayesian statistics offer a flexible and coherent framework for statistical inference and decision-making, particularly in situations where prior knowledge is available or can be explicitly incorporated into the analysis.
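
The principles above can be sketched with a conjugate Beta-Binomial model, where the posterior has a closed form. The coin-flip setting, the Beta(2, 2) prior, the data counts, and the helper names are all illustrative assumptions; the credible interval is approximated on a grid using only the standard library.

```python
def update_beta(alpha, beta, successes, failures):
    """Conjugate update: Beta(alpha, beta) prior plus binomial data gives a Beta posterior."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


def credible_interval(alpha, beta, mass=0.95, grid_size=10_000):
    """Approximate equal-tailed credible interval by summing the density on a grid."""
    thetas = [(i + 0.5) / grid_size for i in range(grid_size)]
    # Unnormalized Beta density; the normalizing constant cancels below.
    weights = [t ** (alpha - 1) * (1 - t) ** (beta - 1) for t in thetas]
    total = sum(weights)
    lower_tail = (1 - mass) / 2
    upper_tail = 1 - lower_tail
    cumulative = 0.0
    lower = upper = None
    for t, w in zip(thetas, weights):
        cumulative += w / total
        if lower is None and cumulative >= lower_tail:
            lower = t
        if upper is None and cumulative >= upper_tail:
            upper = t
            break
    return lower, upper


prior = (2, 2)                                               # prior belief (assumed)
post1 = update_beta(*prior, successes=7, failures=3)         # after a first batch of data
post2 = update_beta(*post1, successes=12, failures=8)        # posterior reused as the next prior
combined = update_beta(*prior, successes=19, failures=11)    # all data in a single update

low, high = credible_interval(*post2)
print("posterior parameters:", post2)
print(f"posterior mean: {beta_mean(*post2):.3f}")
print(f"approx. 95% credible interval: ({low:.3f}, {high:.3f})")
```

Updating in two batches and updating once with all the data give the same posterior parameters, which is the updateability property in item 6.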

Bayesian Inference

Bayesian inference is a statistical framework that combines prior knowledge or beliefs with observed data to update the probability of events or hypotheses. It is based on Bayes’ theorem, which gives the posterior probability of a hypothesis given the observed evidence in terms of its prior probability and the likelihood of that evidence.

In Bayesian inference, we start with a prior probability distribution that represents our initial beliefs about the event of interest. As we collect new data, we update this distribution using Bayes’ theorem to obtain a posterior probability distribution. The posterior distribution incorporates both the prior information and the observed data, providing an updated and more accurate representation of our beliefs.
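
In symbols, writing θ for the unknown quantity and D for the observed data (notation assumed here for illustration), the update from prior to posterior can be written as:

```latex
p(\theta \mid D) = \frac{p(D \mid \theta)\, p(\theta)}{p(D)},
\qquad
p(D) = \int p(D \mid \theta')\, p(\theta')\, d\theta'
```

The denominator does not depend on θ, which is why the posterior is often stated only up to proportionality.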

Bayesian statistics is the broader branch of statistics built on Bayesian inference. It provides a comprehensive framework for analyzing and modeling data, with a focus on encoding prior knowledge as probability distributions and updating those distributions as data arrive.

One key advantage of Bayesian inference and Bayesian statistics is the flexibility in incorporating prior knowledge into the analysis. This prior information can come from previous studies, expert opinion, or other external sources. By considering prior beliefs alongside the observed data, Bayesian methods can provide more nuanced and personalized insights.

However, Bayesian inference also requires choosing the form and parameters of the prior distribution, which can be challenging. Additionally, the posterior distribution often has no closed-form solution, which has led to the development of approximation techniques such as Markov chain Monte Carlo (MCMC) methods.
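
To make the MCMC idea concrete, here is a minimal random-walk Metropolis sketch. The target (the posterior of a coin's bias under a uniform prior after 7 heads in 10 flips), the proposal step size, the burn-in length, and the function names are illustrative assumptions; practical work would typically rely on an established library rather than a hand-written sampler.

```python
import math
import random


def log_posterior(theta, heads=7, flips=10):
    """Unnormalized log posterior: binomial likelihood times a uniform prior on (0, 1)."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # zero prior probability outside the unit interval
    return heads * math.log(theta) + (flips - heads) * math.log(1.0 - theta)


def metropolis(log_target, start, n_samples, step=0.2, seed=0):
    """Random-walk Metropolis with a symmetric Gaussian proposal.

    `start` must be a point where the target density is positive.
    """
    rng = random.Random(seed)
    current = start
    current_lp = log_target(current)
    samples = []
    for _ in range(n_samples):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_target(proposal)
        # Accept with probability min(1, target(proposal) / target(current)).
        accept_prob = math.exp(min(0.0, proposal_lp - current_lp))
        if rng.random() < accept_prob:
            current, current_lp = proposal, proposal_lp
        samples.append(current)
    return samples


samples = metropolis(log_posterior, start=0.5, n_samples=20_000)
kept = samples[2_000:]  # discard an assumed burn-in period
posterior_mean = sum(kept) / len(kept)
print(f"estimated posterior mean: {posterior_mean:.3f}")
```

For this simple target the exact posterior is Beta(8, 4) with mean 2/3, so the sampled mean can be checked against a known answer; the sampler itself only needs the posterior up to a constant, which is what makes the approach useful when no closed form exists.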

Overall, Bayesian inference and Bayesian statistics provide a powerful framework for making statistically informed decisions by incorporating prior knowledge and updating beliefs based on observed data.

Benefits and Applications of Bayesian Statistics

Bayesian statistics is a powerful framework that allows for the inclusion of prior knowledge and beliefs into statistical analysis. It is based on Bayes’ theorem, which updates prior beliefs with observed data to obtain posterior beliefs. Here are some benefits and applications of Bayesian statistics:

1. Incorporation of prior information: Unlike classical statistics, Bayesian statistics enables the explicit incorporation of prior knowledge or beliefs into the analysis. This is particularly valuable when dealing with limited data, as the prior information can help improve the accuracy of estimates.

2. Updated estimates: Bayesian methods provide posterior distributions, which represent updated beliefs after incorporating data. These distributions provide a more complete picture of uncertainty and allow for more nuanced interpretations compared to point estimates.

3. Flexibility and adaptability: Bayesian statistics can handle a wide range of statistical problems, including regression analysis, hypothesis testing, parameter estimation, and model selection. It offers a flexible and adaptive approach to modeling complex relationships and making predictions.

4. Data-driven decision making: Bayesian statistics provides a framework for decision analysis by evaluating the expected value of different decisions under uncertainty. This allows for informed decision making that takes into account both prior beliefs and observed data.

5. Small sample sizes: Bayesian methods can be especially useful when data is limited, as they allow for the use of prior information to compensate for the lack of data. This makes it particularly relevant in scientific research when data collection may be expensive, time-consuming, or ethically constrained.

6. Handling missing data: Bayesian statistics provides a principled approach for handling missing data, allowing all available information to be used. Missing values are treated as unknown quantities and imputed from the observed data and prior knowledge; how well this limits bias depends on the assumptions made about why the data are missing.

7. Hierarchical modeling: Bayesian statistics supports the modeling of complex hierarchical structures, where parameters at different levels are estimated simultaneously. This is particularly useful in analyzing data with grouped or nested structures, such as multi-level or longitudinal data.

8. Machine learning: Bayesian statistics has gained popularity in machine learning, as it provides a probabilistic framework for modeling and inference. It can be used for tasks such as classification, clustering, and recommendation systems.

Overall, Bayesian statistics offers a robust framework for statistical analysis that accommodates prior beliefs, updates estimates based on observed data, and allows for more informed decision making under uncertainty. Its flexibility, adaptability, and ability to handle small sample sizes make it a valuable tool in various fields, including medicine, finance, engineering, and social sciences.
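
The decision-analysis point in item 4 can be sketched as choosing the action with the lowest posterior expected loss. The two-state treatment scenario, the posterior probabilities, and the loss table below are hypothetical values for illustration only.

```python
def expected_loss(action, posterior, loss):
    """Posterior expected loss: sum over states of P(state | data) * loss[action][state]."""
    return sum(prob * loss[action][state] for state, prob in posterior.items())


def best_action(posterior, loss):
    """Return the action that minimizes posterior expected loss."""
    return min(loss, key=lambda action: expected_loss(action, posterior, loss))


# Hypothetical posterior over the states of the world after observing data.
posterior = {"effective": 0.7, "ineffective": 0.3}

# Hypothetical losses, indexed as loss[action][state].
loss = {
    "adopt": {"effective": 0.0, "ineffective": 10.0},
    "reject": {"effective": 5.0, "ineffective": 0.0},
}

for action in loss:
    print(f"{action}: expected loss = {expected_loss(action, posterior, loss):.2f}")
print("best action:", best_action(posterior, loss))
```

Under these assumed numbers, adopting has an expected loss of 3.0 against 3.5 for rejecting, so adopting is preferred; changing either the posterior or the loss table can reverse the choice.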

Criticisms and Limitations of Bayesian Statistics

There are several criticisms and limitations of Bayesian statistics that should be taken into consideration when using this approach:

1. Subjectivity: One of the main criticisms of Bayesian statistics is its reliance on prior beliefs and subjective judgments. The choice of a prior distribution can greatly impact the results, and different individuals may have different priors, leading to varying conclusions. This subjectivity can introduce bias into the analysis.

2. Computational Complexity: Bayesian analysis often involves complex calculations, especially when dealing with large datasets or complex models. This computational complexity can make Bayesian statistics less practical and efficient, particularly when compared to simpler frequentist methods.

3. Dependence on Prior Information: The Bayesian approach heavily relies on incorporating prior information into the analysis. However, in many cases, reliable prior information might be limited or not available. This limitation can be especially challenging when analyzing new or rare events, where little or no prior information exists.

4. Sensitivity to Priors: The choice of prior distributions can have a significant impact on the posterior estimates. In some cases, the choice of prior can dominate the results, leading to different conclusions depending on the specific prior used. This sensitivity to priors can make the interpretation of Bayesian results more difficult and less reliable.

5. Lack of Robustness: Bayesian analyses can be sensitive to outliers or extreme values in the data, particularly when the likelihood assumes light-tailed (for example, normal) errors. In such cases, small changes in the data can lead to substantial changes in the posterior estimates, which may not be desirable when dealing with noisy or uncertain data.

6. Limited Use in Hypothesis Testing: Bayesian statistics is often critiqued for lacking a hypothesis testing framework as standardized as the frequentist one. Whereas frequentist statistics provides p-values and confidence intervals with established conventions, Bayesian analyses typically report posterior probabilities or Bayes factors, which depend on the chosen priors and make hypothesis tests less straightforward to conduct and compare.

7. Data Combination Challenges: Bayesian statistics can face challenges in combining data from multiple sources or studies. Incorporating different prior distributions and accounting for potential biases or heterogeneity in the data can be challenging, and the results may be sensitive to the specific methods used.

It is important to recognize these criticisms and limitations when using Bayesian statistics and to carefully consider their potential impact on the analysis and interpretation of results.
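
The sensitivity to priors described in items 1 and 4 can be illustrated with a conjugate Beta-Binomial model. The three priors and the two data sets below are hypothetical; the sketch only compares how far apart the posterior means are with 10 observations versus 1,000.

```python
def posterior_mean(prior_alpha, prior_beta, successes, trials):
    """Posterior mean of a success probability under a Beta prior and binomial data."""
    return (prior_alpha + successes) / (prior_alpha + prior_beta + trials)


# Hypothetical priors held by three different analysts.
priors = {
    "uniform Beta(1, 1)": (1, 1),
    "skeptical Beta(2, 8)": (2, 8),
    "optimistic Beta(8, 2)": (8, 2),
}


def spread(successes, trials):
    """Gap between the largest and smallest posterior mean across the priors."""
    means = [posterior_mean(a, b, successes, trials) for a, b in priors.values()]
    return max(means) - min(means)


small = spread(successes=6, trials=10)       # small sample: the prior matters a lot
large = spread(successes=600, trials=1000)   # large sample: the data dominate

print(f"spread of posterior means, n = 10:   {small:.3f}")
print(f"spread of posterior means, n = 1000: {large:.3f}")
```

A comparison like this is one simple form of prior sensitivity analysis: if the conclusions change materially across reasonable priors, the data alone are not settling the question.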

Topics related to Bayesian Statistics

[Introduction] Interpretation of probability and Bayes' rule part 1 – YouTube

Bayesian statistics syllabus – YouTube

Bayesian vs frequentist statistics – YouTube

Bayes theorem, the geometry of changing beliefs – YouTube

Bayes theorem, the geometry of changing beliefs – YouTube

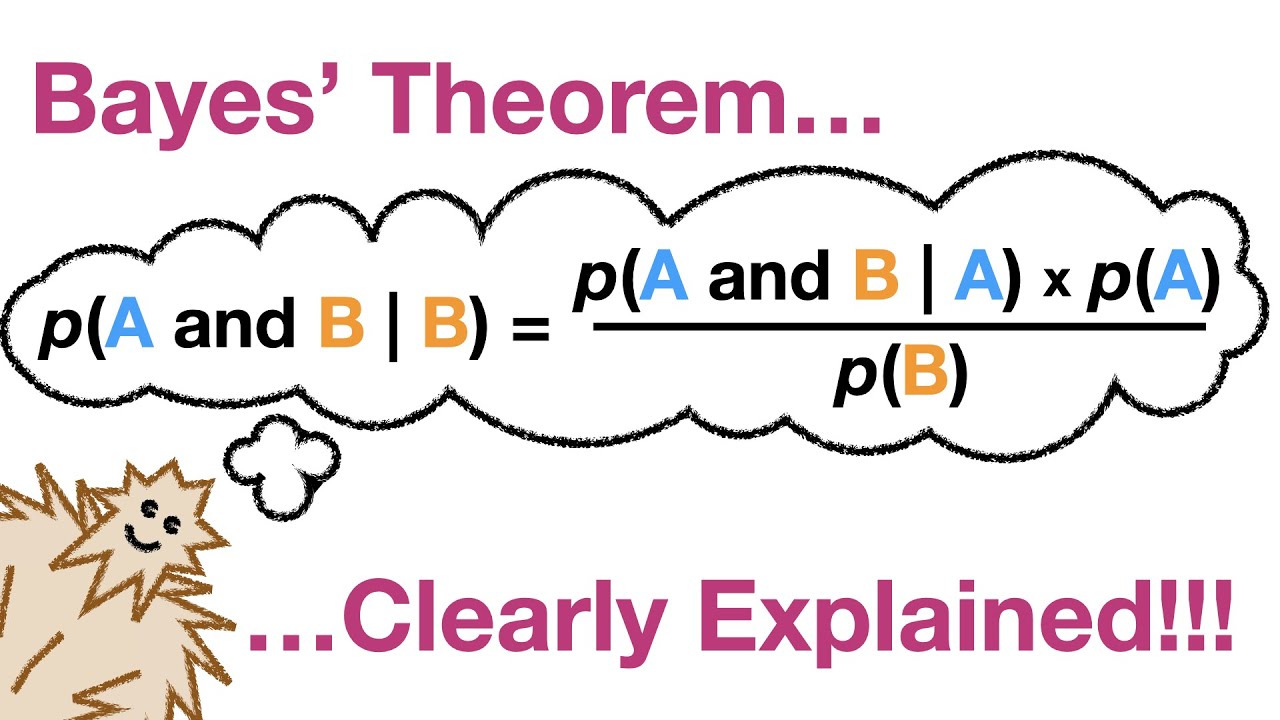

Bayes' Theorem, Clearly Explained!!!! – YouTube

The Bayesian Trap – YouTube

You Know I’m All About that Bayes: Crash Course Statistics #24 – YouTube

Bayesian Statistics with Hannah Fry – YouTube

Bayes' Theorem – The Simplest Case – YouTube

Introduction to Bayesian statistics, part 1: The basic concepts – YouTube
