Introduction to eigenvalues

Eigenvalues are a fundamental concept in linear algebra with applications across mathematics and science. They summarize how a matrix or linear transformation stretches, shrinks, or reverses vectors along particular directions.

In simple terms, an eigenvalue of a matrix or linear transformation is a scalar λ for which there is a non-zero vector that the matrix maps to λ times itself. This might sound complicated, but it is easier to understand with an example.

Consider a square matrix A and a non-zero vector v. If Av = λv for some scalar λ, then λ is an eigenvalue of A and v is a corresponding eigenvector: when the matrix A acts on the vector v, it simply scales v by a factor of λ (and reverses its direction if λ is negative).
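The relation Av = λv can be checked numerically. The following is a minimal sketch in plain Python; the matrix `A`, the vectors, and the helper `mat_vec` are illustrative assumptions, not part of the original text.

```python
def mat_vec(A, v):
    """Multiply a matrix (given as a list of rows) by a vector (a list of numbers)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

# Hypothetical example matrix, chosen so the arithmetic is easy to follow by hand.
A = [[2, 1],
     [1, 2]]

v = [1, 1]    # candidate eigenvector
lam = 3       # candidate eigenvalue

Av = mat_vec(A, v)                 # A applied to v
scaled = [lam * x for x in v]      # lambda times v
is_eigenpair = (Av == scaled)      # True when A only rescales v by lam

# A vector that is not an eigenvector changes direction under A.
w = [1, 0]
Aw = mat_vec(A, w)                 # not a scalar multiple of w
```

Here `A` maps `[1, 1]` to `[3, 3]`, so `[1, 1]` is an eigenvector with eigenvalue 3, while `[1, 0]` is sent to a vector pointing in a different direction.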

Eigenvalues provide valuable information about the behavior and properties of matrices and linear transformations. They help in determining important characteristics such as the stability of systems, whether a matrix is invertible, the convergence rate of iterative algorithms, and the long-term behavior of dynamical systems.

Finding eigenvalues involves solving the characteristic equation, which is obtained by subtracting λI (λ times the identity matrix) from the original matrix A and setting the determinant of the result equal to zero: det(A − λI) = 0. The solutions of this equation are the eigenvalues of A. Once the eigenvalues are known, the corresponding eigenvectors are found by substituting each eigenvalue back into (A − λI)v = 0, which is equivalent to Av = λv, and solving for the non-zero vectors v.
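For a 2 × 2 matrix the procedure above can be carried out in closed form, because det(A − λI) expands to λ² − (trace)λ + (determinant). The sketch below assumes a 2 × 2 input given as a list of rows; the function names `eigenvalues_2x2` and `eigenvector_2x2` and the sample matrix are hypothetical, chosen only for illustration.

```python
import cmath

def eigenvalues_2x2(A):
    """Roots of det(A - lam*I) = lam^2 - trace*lam + det for a 2x2 matrix A."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    # cmath.sqrt also handles a negative discriminant (complex eigenvalues).
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2

def eigenvector_2x2(A, lam):
    """One non-zero solution v of (A - lam*I) v = 0, assuming lam is an eigenvalue of A."""
    (a, b), (c, d) = A
    if b != 0:
        return [b, lam - a]    # makes the first row of (A - lam*I) v vanish
    if c != 0:
        return [lam - d, c]    # makes the second row of (A - lam*I) v vanish
    # A is diagonal, so the standard basis vectors are eigenvectors.
    return [1, 0] if lam == a else [0, 1]

# Hypothetical example: the characteristic equation is lam^2 - 7*lam + 10 = 0.
A = [[4, 1],
     [2, 3]]
lam1, lam2 = eigenvalues_2x2(A)    # 5 and 2, returned as complex numbers
v1 = eigenvector_2x2(A, lam1)      # proportional to [1, 1]
v2 = eigenvector_2x2(A, lam2)      # proportional to [1, -2]
```

The same function returns the complex pair ±i for the rotation matrix `[[0, -1], [1, 0]]`, which has no real eigenvalues. For larger matrices the characteristic polynomial is rarely solved directly; numerical libraries use iterative methods instead.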

Eigenvalues play a crucial role in many areas such as physics, engineering, computer science, data analysis, and quantum mechanics. They provide insights into the behavior of complex systems and are extensively used in applications like image processing, signal processing, machine learning, and network analysis.

In conclusion, eigenvalues are important mathematical concepts that help us understand the behavior and properties of matrices and linear transformations. They have widespread applications in various fields and their study is essential for advanced mathematical and scientific analysis.

Definition and properties of eigenvalues

Eigenvalues are a concept in linear algebra used to describe how a linear transformation or a matrix acts along certain directions. Specifically, an eigenvalue is the factor by which the transformation scales a non-zero vector whose direction it leaves unchanged or exactly reverses.

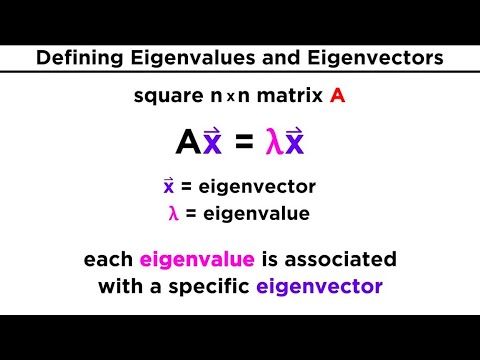

More formally, let A be an n x n matrix. An eigenvalue of A is a scalar λ such that there exists a non-zero vector x for which the equation Ax = λx holds true. The vector x is called an eigenvector corresponding to the eigenvalue λ.

Eigenvalues and eigenvectors have several important properties:

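1. Multiplicity: An eigenvalue may be a repeated root of the characteristic equation; the number of times it is repeated is its algebraic multiplicity. The number of linearly independent eigenvectors associated with the eigenvalue is its geometric multiplicity, which is at least 1 and never exceeds the algebraic multiplicity.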

2. Determinant and Trace: The sum of all eigenvalues of a square matrix, counted with algebraic multiplicity and including complex ones, is equal to its trace (the sum of the elements on its main diagonal), and the product of its eigenvalues is equal to its determinant.

3. Similarity Transformation: If two matrices A and B are similar (A = PBP^(-1) for some invertible matrix P), then they have the same eigenvalues.

4. Diagonalization: A matrix A is diagonalizable if it can be expressed as A = PDP^(-1), where D is a diagonal matrix whose diagonal entries are the eigenvalues of A, and P is an invertible matrix whose columns are the corresponding eigenvectors. This is possible exactly when the n x n matrix A has n linearly independent eigenvectors.

5. Spectral Theorem: For a real symmetric matrix, all eigenvalues are real numbers, eigenvectors corresponding to distinct eigenvalues are orthogonal, and an orthonormal basis consisting of eigenvectors can always be chosen.
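The trace, determinant, and similarity properties in the list above can be spot-checked on a small example. This is a sketch under stated assumptions: the matrices `A` and `P` are hypothetical, `P_inv` is the inverse of `P` written out by hand, and the eigenvalues are computed with the 2 × 2 quadratic formula rather than a general-purpose routine.

```python
import cmath

def eigenvalues_2x2(M):
    """Eigenvalues of a 2x2 matrix from its characteristic polynomial."""
    (a, b), (c, d) = M
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2

def matmul_2x2(X, Y):
    """Product of two 2x2 matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

A = [[4, 1], [2, 3]]         # hypothetical example matrix
P = [[1, 1], [0, 1]]         # any invertible matrix would do
P_inv = [[1, -1], [0, 1]]    # inverse of P, computed by hand

lam1, lam2 = eigenvalues_2x2(A)

# Property 2: sum of eigenvalues = trace, product of eigenvalues = determinant.
trace_A = A[0][0] + A[1][1]
det_A = A[0][0] * A[1][1] - A[0][1] * A[1][0]
sum_matches_trace = abs((lam1 + lam2) - trace_A) < 1e-12
product_matches_det = abs(lam1 * lam2 - det_A) < 1e-12

# Property 3: B = P^(-1) A P is similar to A, so it has the same eigenvalues.
B = matmul_2x2(matmul_2x2(P_inv, A), P)
mu1, mu2 = eigenvalues_2x2(B)
same_spectrum = abs(lam1 - mu1) < 1e-12 and abs(lam2 - mu2) < 1e-12
```

Although `B` has different entries from `A`, its trace, determinant, and eigenvalues are unchanged, which is what similarity guarantees.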

Eigenvalues and eigenvectors have numerous applications in various fields, including physics, engineering, computer science, and data analysis. They help in understanding the behavior of linear transformations, solving systems of linear equations, analyzing the stability of dynamic systems, and performing dimensionality reduction techniques like Principal Component Analysis (PCA).

Applications of eigenvalues

Eigenvalues have numerous applications in various fields. Some of the most common applications include:

1. Physics: In quantum mechanics, the eigenvalues of the operator representing an observable are the possible outcomes of measuring it. In particular, the eigenvalues of the Hamiltonian operator are the possible energy levels of a quantum system.

2. Engineering: Eigenvalues are important in structural analysis to determine the natural frequencies and modes of vibration of a physical structure. They help engineers understand the dynamic behavior and stability of systems such as bridges, buildings, and aircraft.

3. Computer Science: Eigenvalues find applications in machine learning and data analysis, particularly in dimensionality reduction techniques like Principal Component Analysis (PCA). In PCA, each eigenvalue of the data's covariance matrix measures the variance along the corresponding eigenvector, so the directions with the largest eigenvalues are kept and the rest are discarded to reduce the dimensionality of high-dimensional data.

4. Image and Signal Processing: Eigenvalues play a key role in image compression and feature extraction. Techniques like Singular Value Decomposition (SVD) rely on singular values, which are the square roots of the eigenvalues of A^T A, to identify the most significant features or patterns in an image or signal.

5. Control Systems: Eigenvalues are used in control theory to analyze the stability and response characteristics of dynamic systems. They help determine whether a system will oscillate, decay, or grow over time, which is crucial in designing control strategies for devices and processes.

6. Graph Theory: Eigenvalues are used in graph theory to analyze connectivity and centrality measures. For example, the eigenvalues of a graph's adjacency and Laplacian matrices carry information about the connectivity of a network (the number of zero eigenvalues of the Laplacian equals the number of connected components), while the leading eigenvector of the adjacency matrix can be used to identify the most influential nodes.

7. Economics: Eigenvalues find applications in economics for analyzing input-output models, studying economic networks, and understanding the stability of economic systems.

8. Environmental Science: Eigenvalues are used to analyze ecological networks and understand the stability and resilience of ecosystems.
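As one concrete instance of the list above, the control-systems item can be sketched for a two-dimensional linear system x'(t) = A x(t): the solution decays if every eigenvalue of A has a negative real part, grows if some eigenvalue has a positive real part, and oscillates when the eigenvalues have non-zero imaginary parts. The function name `classify_stability`, the tolerance, and the sample matrices below are assumptions made for illustration.

```python
import cmath

def eigenvalues_2x2(A):
    """Eigenvalues of a 2x2 matrix from its characteristic polynomial."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2

def classify_stability(A, tol=1e-12):
    """Classify x'(t) = A x(t) by the eigenvalues of the 2x2 matrix A.

    Returns (trend, oscillates), where trend is "decays", "grows", or
    "marginal" (largest real part is zero, so this test alone is inconclusive).
    """
    eigs = eigenvalues_2x2(A)
    max_real = max(lam.real for lam in eigs)
    oscillates = any(abs(lam.imag) > tol for lam in eigs)
    if max_real < -tol:
        trend = "decays"
    elif max_real > tol:
        trend = "grows"
    else:
        trend = "marginal"
    return trend, oscillates

# Damped oscillator x'' + 0.5 x' + 2 x = 0 written as a first-order system.
damped = [[0, 1], [-2, -0.5]]
# Saddle: one direction grows while the other decays.
saddle = [[1, 0], [0, -1]]

damped_result = classify_stability(damped)
saddle_result = classify_stability(saddle)
```

The damped oscillator has a complex-conjugate pair of eigenvalues with negative real part, so it oscillates while decaying; the saddle has a positive real eigenvalue, so generic solutions grow without oscillating.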

These are just a few examples of the vast range of applications of eigenvalues. They are a fundamental concept used in various scientific and technical disciplines, providing insights into the behavior, structure, and properties of complex systems.

Eigenvalues in linear algebra

In linear algebra, eigenvalues are a fundamental concept used to understand the properties of linear transformations or matrices.

Given a linear transformation T, an eigenvalue of T is a scalar λ for which there exists a non-zero vector v such that T(v) = λv. In simpler terms, it means that when the linear transformation is applied to a vector, the result is a scaled version of the same vector. The vector v is called an eigenvector corresponding to the eigenvalue λ.

Mathematically, we can represent the eigenvalue equation as:

T(v) = λv

This equation can also be written in matrix form as:

A · v = λ · v

where A is the matrix representation of the linear transformation T.

To find the eigenvalues of a matrix or linear transformation, we solve the characteristic equation, which is obtained by subtracting λI (λ times the identity matrix) from the matrix A and setting the determinant of the result to zero:

det(A − λI) = 0

The solutions to this equation are the eigenvalues of A.
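A short worked example may make the characteristic equation concrete. The 2 × 2 matrix below is a hypothetical choice, picked so that the polynomial factors over the integers.

```latex
\[
A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix},
\qquad
\det(A - \lambda I)
  = \det\begin{pmatrix} 2 - \lambda & 1 \\ 1 & 2 - \lambda \end{pmatrix}
  = (2 - \lambda)^2 - 1
  = \lambda^2 - 4\lambda + 3
  = (\lambda - 1)(\lambda - 3).
\]
\[
\det(A - \lambda I) = 0
\;\Longrightarrow\;
\lambda_1 = 3 \text{ with } v_1 = \begin{pmatrix} 1 \\ 1 \end{pmatrix},
\qquad
\lambda_2 = 1 \text{ with } v_2 = \begin{pmatrix} 1 \\ -1 \end{pmatrix}.
\]
```

Each eigenvector is obtained by solving (A − λI)v = 0 for the corresponding λ. As a check, the eigenvalues sum to 4, the trace of A, and multiply to 3, its determinant.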

Eigenvalues play a crucial role in understanding the behavior of linear transformations and matrices. They show how strongly vectors are stretched or contracted along the eigenvector directions and whether a matrix is invertible or singular (a matrix is singular exactly when 0 is one of its eigenvalues). Moreover, eigenvalues and eigenvectors are used in diagonalization and in various applications of linear algebra in fields such as physics, engineering, and computer science.

Importance of eigenvalues in mathematics

Eigenvalues play a crucial role in various branches of mathematics, including linear algebra, differential equations, and numerical analysis. They provide valuable information about the properties and behavior of matrices and operators, allowing us to understand and solve a wide range of mathematical and real-world problems. Here are some key aspects highlighting the importance of eigenvalues:

1. Matrix Diagonalization: When a matrix has enough linearly independent eigenvectors, its eigenvalues and eigenvectors are used to diagonalize it, i.e., transform it into a diagonal matrix using an appropriate change of basis. Diagonal matrices have several advantages, such as making powers of the matrix easy to compute and showing explicitly how the transformation scales each basis direction.

2. Stability Analysis: In the study of differential equations, eigenvalues are used to determine the stability and behavior of equilibrium points or solutions. By analyzing the eigenvalues of a linearized system, stability properties, such as asymptotic stability or instability, can be determined.

3. Eigenvalue Problems: Eigenvalues play a central role in solving eigenvalue problems, where the goal is to find the eigenvalues and corresponding eigenvectors of a given matrix or operator. These problems arise in various fields, including quantum mechanics, vibration analysis, optimization, and data analysis.

4. Spectral Theory: Eigenvalues are fundamental to spectral theory, which studies the properties of operators and matrices in relation to their eigenvalues. Spectral theory is used in diverse areas such as quantum mechanics, functional analysis, and partial differential equations.

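5. Matrix Analysis: Eigenvalues provide important information about the characteristics of matrices. For example, the eigenvalues of a square matrix determine its determinant (their product), its trace (their sum), and its invertibility (no eigenvalue is zero); for a diagonalizable matrix, the rank equals the number of non-zero eigenvalues. Moreover, for symmetric matrices, properties such as positive definiteness (all eigenvalues positive) and the spectral norm (the largest absolute value of an eigenvalue) can be read off from the eigenvalues.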

6. Numerical Methods: Eigenvalues play a crucial role in numerical analysis and computation. Efficient algorithms have been developed to approximate eigenvalues and eigenvectors, enabling numerical solutions to many problems, such as finding the dominant eigenvalues in large matrices or performing principal component analysis in data analysis.
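Power iteration is one of the simplest algorithms for the dominant-eigenvalue problem mentioned in the last item. The sketch below is a plain-Python illustration rather than a production routine; it assumes a real square matrix with a single eigenvalue of strictly largest absolute value and a starting vector that is not orthogonal to the dominant eigenvector. The function name, iteration cap, and tolerance are hypothetical choices.

```python
import math

def power_iteration(A, num_iters=200, tol=1e-12):
    """Approximate the dominant eigenvalue and a unit eigenvector of A.

    A is a square matrix given as a list of rows. Repeatedly applies A and
    renormalizes, estimating the eigenvalue with the Rayleigh quotient.
    """
    n = len(A)
    v = [1.0] + [0.0] * (n - 1)    # starting vector (an assumption of this sketch)
    lam = 0.0
    for _ in range(num_iters):
        w = [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            raise ValueError("iterate mapped to zero; try another starting vector")
        v_next = [x / norm for x in w]
        # Rayleigh quotient v^T A v for the unit vector v_next.
        Av = [sum(A[i][j] * v_next[j] for j in range(n)) for i in range(n)]
        lam_next = sum(x * y for x, y in zip(v_next, Av))
        if abs(lam_next - lam) < tol:
            return lam_next, v_next
        v, lam = v_next, lam_next
    return lam, v

# Hypothetical example: a symmetric matrix with eigenvalues 3 and 1.
A = [[2.0, 1.0],
     [1.0, 2.0]]
dominant, vec = power_iteration(A)
```

For this matrix the estimate approaches 3, with the eigenvector approaching the direction of `[1, 1]`. Convergence is slow when the two largest eigenvalues are close in absolute value, which is one reason practical libraries use more sophisticated methods.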

Overall, eigenvalues are a fundamental concept in linear algebra and serve as a valuable tool in studying and solving mathematical problems in various fields. Their applications range from basic matrix analysis to advanced topics in physics, engineering, computer science, and data analysis.

Topics related to Eigenvalue

Eigenvectors and eigenvalues | Chapter 14, Essence of linear algebra – YouTube

Finding Eigenvalues and Eigenvectors – YouTube

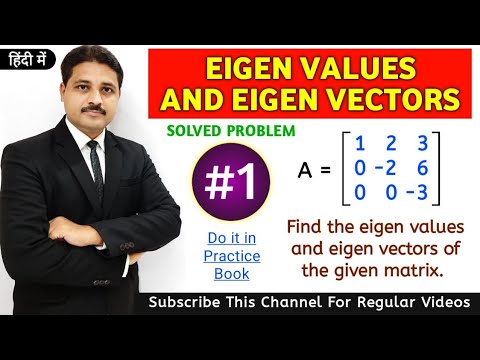

EIGEN VALUES AND EIGEN VECTORS IN HINDI SOLVED PROBLEM 1 IN MATRICES @TIKLESACADEMY – YouTube

EIGEN VALUES AND EIGEN VECTORS IN HINDI SOLVED PROBLEM 2 IN MATRICES @TIKLESACADEMY – YouTube

EIGEN VALUES & EIGEN VECTORS | MATRICES | S-1 | ENGINEERING MATHS | ENGINEERING SECOND YEAR – YouTube

EIGEN VALUES & EIGEN VECTORS | MATRICES | S-2 | ENGINEERING MATHS | ENGINEERING SECOND YEAR – YouTube

Eigen Values and Eigen Vectors in HINDI { 2022} | Matrices – YouTube

21. Eigenvalues and Eigenvectors – YouTube

The applications of eigenvectors and eigenvalues | That thing you heard in Endgame has other uses – YouTube
