Introduction to Linear Algebra

Linear algebra is a branch of mathematics that deals with vector spaces and linear equations. It studies vector spaces, especially finite-dimensional ones, and the linear transformations between them. Linear algebra plays a crucial role across mathematics, as well as in fields such as physics, engineering, computer science, and economics.

At its core, linear algebra is concerned with systems of linear equations and their solutions. Such a system can be written compactly as Ax = b, where A is the matrix of coefficients, x is the vector of unknowns, and b is the vector of constants. The goal is to find the vectors x that satisfy the equation; depending on A and b, a system has exactly one solution, infinitely many, or none. Linear algebra provides tools and methods to solve these systems efficiently.
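To make this concrete, here is a minimal sketch of Gaussian elimination with partial pivoting in plain Python, assuming a square coefficient matrix A whose system has a unique solution. The function name `solve` is illustrative; in practice a library routine such as NumPy's `numpy.linalg.solve` would normally be used instead.

```python
def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting.

    A is a list of n rows (each a list of n numbers) and b is a list of n numbers.
    Raises ValueError if A is singular (or numerically close to it).
    """
    n = len(A)
    # Augmented matrix [A | b], so every row operation acts on both sides at once.
    M = [[float(a) for a in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        # Partial pivoting: bring up the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Subtract multiples of the pivot row to zero out the entries below it.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


# 2x + y = 5 and x - y = 1
print(solve([[2, 1], [1, -1]], [5, 1]))  # [2.0, 1.0]
```

The example system has the unique solution x = 2, y = 1. Swapping in the row with the largest available pivot avoids dividing by zero or by very small numbers, which would amplify rounding errors.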

One of the fundamental concepts in linear algebra is the vector space. A vector space is a set of vectors, equipped with vector addition and scalar multiplication, that satisfies certain axioms, such as closure under both operations. In two and three dimensions, vectors can be pictured geometrically as directed line segments with a magnitude and a direction. Vector spaces provide a framework for working in higher dimensions and for solving a wide range of mathematical problems.

Linear transformations are another important concept in linear algebra. A linear transformation is a function from one vector space to another that preserves vector addition and scalar multiplication: T(u + v) = T(u) + T(v) and T(cv) = cT(v). Once bases are chosen, every linear transformation between finite-dimensional spaces can be represented by a matrix, so its properties can be analyzed using the techniques and theorems of linear algebra.
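As an illustration, the sketch below represents a counterclockwise rotation of the plane by the standard 2 x 2 rotation matrix and checks the linearity property numerically. The helper names `mat_vec` and `rotation`, and the sample vectors and scalars, are hypothetical choices for this example.

```python
import math

def mat_vec(A, v):
    """Multiply matrix A (a list of rows) by vector v (a list)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def rotation(theta):
    """2 x 2 matrix that rotates plane vectors counterclockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


R = rotation(math.pi / 2)
u, v = [1.0, 0.0], [0.0, 2.0]
a, b = 3.0, -1.0

# Linearity: T(a*u + b*v) should equal a*T(u) + b*T(v), up to rounding.
lhs = mat_vec(R, [a * ui + b * vi for ui, vi in zip(u, v)])
rhs = [a * p + b * q for p, q in zip(mat_vec(R, u), mat_vec(R, v))]
print(lhs, rhs)  # both approximately [2.0, 3.0]
```

The columns of the rotation matrix are the images of the standard basis vectors (1, 0) and (0, 1); this is how the matrix of any linear transformation is built once a basis is fixed.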

The study of linear algebra is not limited to vectors and matrices. It also involves topics such as eigenvalues and eigenvectors, determinants, inner product spaces, and diagonalization. Eigenvalues and eigenvectors play a crucial role in understanding the behavior of linear transformations, while the determinant of a square matrix measures how the corresponding transformation scales volume and tells whether the matrix is invertible (it is invertible exactly when its determinant is nonzero). Inner product spaces introduce notions of length and angle in vector spaces, and diagonalization, where it is possible, reduces a matrix to a diagonal form that is much easier to analyze.
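As a sketch of the link between determinants and invertibility, the determinant can be computed by the same row reduction used to solve linear systems. This plain-Python version is illustrative (the name `determinant` is hypothetical): it flips the sign for each row swap and multiplies the pivots together.

```python
def determinant(A):
    """Determinant of a square matrix via Gaussian elimination.

    Row swaps flip the sign, adding a multiple of one row to another leaves the
    determinant unchanged, and the determinant of the final upper-triangular
    matrix is the product of its diagonal entries (the pivots).
    """
    n = len(A)
    M = [[float(a) for a in row] for row in A]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0.0:
            return 0.0  # no nonzero pivot: the columns are linearly dependent
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            det = -det
        det *= M[col][col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n):
                M[r][c] -= factor * M[col][c]
    return det


print(determinant([[2, 1], [1, -1]]))  # -3.0: nonzero, so the matrix is invertible
print(determinant([[1, 2], [2, 4]]))   # 0.0: the matrix is singular
```

The exact-zero test only suits small, well-scaled examples like these; with floating-point data, a nearly singular matrix may instead produce a tiny nonzero determinant.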

Overall, linear algebra provides a powerful mathematical framework for solving systems of linear equations, understanding transformations of vector spaces, and analyzing properties of matrices. Its applications are widespread, from computer graphics to data analysis, making it a foundational subject for many branches of science and engineering.

Basic Concepts and Operations in Linear Algebra

Linear algebra is a branch of mathematics that deals with the study of vectors, vector spaces, linear transformations, and systems of linear equations. It provides a powerful framework for solving problems in many areas of science, engineering, and finance.

Below are some basic concepts and operations in linear algebra:

1. Vectors: Geometrically, a vector is a quantity that has both magnitude and direction; more generally, a vector is any element of a vector space. A vector in R^n can be represented as an ordered list of n numbers, called its components, or as a column matrix. Vectors can be added, subtracted, and multiplied by scalars.

2. Vector Spaces: A vector space is a set of vectors that is closed under vector addition and scalar multiplication. It satisfies certain properties, such as the existence of a zero vector and additive inverses. Examples of vector spaces include the set of all real numbers R, the set of all n-dimensional vectors R^n, and the set of all polynomials of degree at most n.

3. Linear Transformations: A linear transformation is a function between vector spaces that preserves vector addition and scalar multiplication. Between finite-dimensional spaces, once bases are chosen, it can be represented as multiplication by a matrix. Examples of linear transformations include rotations, scalings, and projections.

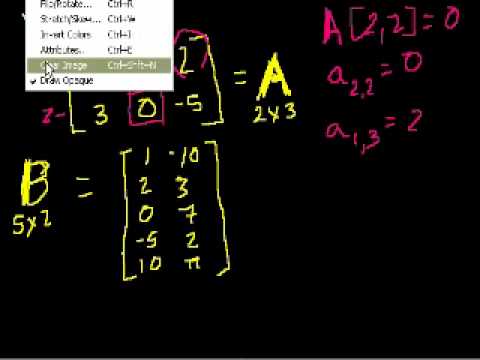

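4. Matrices: A matrix is a rectangular array of numbers. The dimensions of a matrix are given by the number of rows and columns it has: an m x n matrix has m rows and n columns. Matrices of the same dimensions can be added and subtracted entry by entry, and the product AB is defined when the number of columns of A equals the number of rows of B.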

5. Systems of Linear Equations: A system of linear equations is a set of equations involving linear functions of the same variables. Solutions to the system are values of the variables that satisfy all the equations simultaneously. Systems of linear equations can be solved using methods such as Gaussian elimination, matrix inversion, and Cramer’s rule.

6. Eigenvalues and Eigenvectors: A nonzero vector v is an eigenvector of a square matrix A if Av = λv for some scalar λ, called the corresponding eigenvalue; in other words, A maps v to a vector parallel to v. Eigenvectors and eigenvalues are important in the study of linear transformations: the eigenvectors give the directions that the transformation only stretches, shrinks, or flips, and the eigenvalues give the corresponding scale factors.

7. Dot Product: The dot product of two vectors is the sum of the products of their corresponding components. It is related to the angle θ between the vectors by u · v = |u| |v| cos θ, so it measures how closely the vectors point in the same direction and is zero exactly when they are orthogonal.
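The dot product and its relation to the angle between two vectors can be sketched as follows. The function names `dot` and `angle` are illustrative, and `angle` assumes both vectors are nonzero.

```python
import math

def dot(u, v):
    """Sum of the products of corresponding components."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def angle(u, v):
    """Angle in radians between nonzero vectors u and v, from u.v = |u||v|cos(theta)."""
    cos_theta = dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))
    # Clamp to [-1, 1] in case rounding pushes the ratio slightly outside that range.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


print(dot([1, 2, 3], [4, 5, 6]))            # 32
print(math.degrees(angle([1, 0], [0, 1])))  # orthogonal vectors: about 90 degrees
```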

These are just a few of the fundamental concepts and operations in linear algebra. Understanding and utilizing these concepts can help in solving problems in various fields such as physics, computer science, and data analysis.
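For the matrix operations in item 4, the following sketch multiplies an m x n matrix by an n x p matrix stored as lists of rows, raising an error when the dimensions are incompatible. The name `mat_mul` and the example matrices are hypothetical.

```python
def mat_mul(A, B):
    """Product of an m x n matrix A and an n x p matrix B (lists of rows); result is m x p."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("number of columns of A must equal number of rows of B")
    p = len(B[0])
    # Entry (i, j) is the dot product of row i of A with column j of B.
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
            for i in range(len(A))]


A = [[1, 2, 3],
     [4, 5, 6]]       # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]        # 3 x 2
print(mat_mul(A, B))  # 2 x 2 result: [[58, 64], [139, 154]]
```

Matrix multiplication is not commutative in general: here BA is a 3 x 3 matrix, so it cannot even equal the 2 x 2 product AB.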

Applications of Linear Algebra

Linear algebra is a fundamental branch of mathematics that deals with the study of vector spaces, linear equations, and linear transformations. It has numerous applications in various fields, including:

1. Engineering: Linear algebra is heavily used in engineering disciplines such as electrical, mechanical, civil, and aerospace engineering. It is used to solve systems of linear equations, analyze circuits, model mechanical systems, and solve optimization problems.

2. Computer Science: Linear algebra is central to computer graphics, image processing, machine learning, and data analysis. It is used to represent and manipulate multidimensional data, build algorithms for image recognition, perform dimensionality reduction, and solve systems of linear equations in computer simulations.

3. Physics: Linear algebra plays a significant role in physics, particularly in quantum mechanics. It is used to describe the behavior of quantum systems, understand wave functions, and analyze quantum states and operators.

4. Economics: Linear algebra is employed in economics to model and solve optimization problems, analyze market equilibria, and perform input-output analysis, in which the flows between the sectors of an economy are described by large systems of linear equations. It also underlies linear programming and the analysis of matrix games in game theory.

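5. Cryptography: Linear algebra, particularly over finite fields, appears in several parts of cryptography, where it works together with modular arithmetic and finite field theory. For example, the MixColumns step of AES multiplies each column of the cipher state by a fixed matrix over the finite field GF(2^8), the classical Hill cipher encrypts blocks of letters by multiplying them by an invertible matrix modulo 26, and lattice-based cryptosystems rely on problems about integer lattices that are believed to be hard.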

6. Data Science: Linear algebra forms the basis for many data science techniques and algorithms. It is used in machine learning algorithms like linear regression, principal component analysis (PCA), and support vector machines (SVM). It provides the tools to analyze and manipulate high-dimensional datasets efficiently.

7. Statistics: Linear algebra is used in statistical analysis to solve systems of equations, estimate parameters in regression models, and perform dimensionality reduction. It provides the mathematical foundation for statistical techniques like linear regression, analysis of variance (ANOVA), and multivariate analysis.

8. Image and Signal Processing: Linear algebra is used extensively in image and signal processing applications. It enables operations such as filtering, compression, and noise reduction. Techniques like Fourier analysis and wavelet transforms are based on linear algebra principles.
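Linear regression, listed under Data Science and Statistics above, reduces to linear algebra. The sketch below fits a straight line y = c0 + c1 x by least squares, solving the normal equations X^T X c = X^T y for the two coefficients with Cramer's rule. The function name `fit_line` and the example data are illustrative, not taken from any particular library.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = c0 + c1*x via the normal equations (X^T X) c = X^T y.

    X is the design matrix whose i-th row is [1, x_i]. For this two-parameter model
    X^T X = [[n, sum(x)], [sum(x), sum(x^2)]] and X^T y = [sum(y), sum(x*y)],
    and the resulting 2 x 2 system is solved with Cramer's rule.
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = n * sxx - sx * sx  # determinant of X^T X
    if det == 0:
        raise ValueError("need at least two distinct x values")
    c0 = (sy * sxx - sx * sxy) / det
    c1 = (n * sxy - sx * sy) / det
    return c0, c1


# Illustrative data lying exactly on y = 1 + 2x.
print(fit_line([0, 1, 2, 3], [1, 3, 5, 7]))  # (1.0, 2.0)
```

For larger or ill-conditioned problems, solvers based on the QR or singular value decomposition (for example `numpy.linalg.lstsq`) are generally preferred over forming X^T X explicitly.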

These are just a few examples of the vast range of applications that linear algebra has in various fields. Its ability to model and solve complex problems using algebraic structures makes it a valuable tool in many disciplines.

Linear Algebra in Computer Science and Engineering

Linear algebra plays a critical role in computer science and engineering. It provides a framework for solving complex problems and modeling real-world phenomena computationally. Here are some key areas where linear algebra is applied:

1. Computer Graphics: In computer graphics, linear algebra is fundamental for transforming and manipulating objects in 2D and 3D space. Matrices and vectors are used to represent geometric transformations such as rotation, scaling, and projection; translation, which is not a linear map, is also expressed as a matrix multiplication by working in homogeneous coordinates. Techniques like ray tracing and shadow rendering also rely on linear algebra calculations.

2. Machine Learning: Linear algebra is essential in machine learning as it provides the foundation for many algorithms. Matrices are used to represent datasets, where each row corresponds to a data point and each column represents a feature. Operations like matrix multiplication, matrix factorization, and eigendecomposition are used for tasks like regression, dimensionality reduction, and clustering.

3. Computer Vision: Computer vision systems rely on linear algebra to process and analyze visual data. Matrices can represent images, where each element corresponds to a pixel’s intensity or color values. Techniques like image filtering, object recognition, image stitching, and motion tracking involve matrix operations like convolution, matrix inversion, and matrix decompositions.

4. Network Analysis: Linear algebra is used to analyze networks and graph structures. A network is commonly represented by its adjacency matrix, where each entry indicates the presence or absence of a connection between two nodes. Techniques like graph traversal, centrality analysis, and community detection involve operations such as matrix powers, eigenanalysis, and singular value decomposition.

5. Signal Processing: Linear algebra is integral in signal processing tasks like audio and image compression, noise reduction, and filtering. Matrices and vectors can represent signals where each element represents a sampled value. Techniques like Fourier transform, linear filtering, and eigenanalysis are commonly used to process and analyze signals.

6. Optimization: Linear algebra provides the foundation for various optimization techniques used in computer science and engineering. Problems such as linear programming, regression analysis, and machine learning model fitting involve solving systems of linear equations or optimizing linear objective functions.
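To illustrate the homogeneous coordinates mentioned under Computer Graphics, the sketch below writes a 2D point (x, y) as (x, y, 1), so that a translation becomes a 3 x 3 matrix and can be composed with a rotation into a single matrix. All function names here are illustrative.

```python
import math

def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def translation(tx, ty):
    """3 x 3 homogeneous matrix that shifts 2D points by (tx, ty)."""
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]

def rotation(theta):
    """3 x 3 homogeneous matrix that rotates 2D points counterclockwise about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def transform_point(M, point):
    """Apply homogeneous matrix M to the 2D point (x, y), written as (x, y, 1)."""
    v = (point[0], point[1], 1.0)
    x, y, w = [sum(M[i][k] * v[k] for k in range(3)) for i in range(3)]
    return (x / w, y / w)


# Rotate by 90 degrees, then translate by (2, 0): the rightmost matrix acts first.
M = mat_mul(translation(2.0, 0.0), rotation(math.pi / 2))
print(transform_point(M, (1.0, 0.0)))  # approximately (2.0, 1.0)
```

Because matrix multiplication is not commutative, the order of composition matters: translating first and then rotating sends (1, 0) to roughly (0, 3) instead.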

Overall, linear algebra is a powerful mathematical tool that enables the analysis, representation, and manipulation of data and structures in computer science and engineering, making it an essential subject for students in these fields.
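The matrix-power technique mentioned under Network Analysis can be illustrated with a small example: for an adjacency matrix A, the (i, j) entry of A^k counts the walks of length k from node i to node j. The three-node graph and the helper names below are hypothetical.

```python
def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_pow(A, k):
    """A raised to the power k (k >= 1) by repeated multiplication."""
    if k < 1:
        raise ValueError("k must be at least 1")
    result = A
    for _ in range(k - 1):
        result = mat_mul(result, A)
    return result


# Hypothetical undirected path graph: 0 - 1 - 2.
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]

# Entry (i, j) of adj^k is the number of walks of length k from node i to node j.
print(mat_pow(adj, 2))  # [[1, 0, 1], [0, 2, 0], [1, 0, 1]]
```

For instance, the 2 on the diagonal of A^2 reflects the two walks 1-0-1 and 1-2-1 that start and end at node 1.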

Advanced Topics in Linear Algebra

Advanced topics in linear algebra build on the foundation of basic linear algebra. They treat vector spaces, linear transformations, and matrices in greater depth and at a higher level of abstraction.

Some advanced topics in linear algebra include:

1. Vector Spaces: This topic explores the properties and structures of vector spaces in more detail. It involves studying concepts such as subspaces, bases, dimension, linear independence, and linear combinations.

2. Inner Product Spaces: An inner product space is a vector space equipped with an inner product, a generalization of the dot product. Inner products make it possible to define the lengths of vectors and the angles between them, as well as orthogonality and orthonormal bases.

3. Orthogonalization and Gram-Schmidt Process: The Gram-Schmidt process is a technique for turning a set of linearly independent vectors in an inner product space into an orthogonal (or orthonormal) set that spans the same subspace. It is often used to find an orthogonal basis for a subspace.

4. Eigenvalues and Eigenvectors: Eigenvalues and eigenvectors are fundamental concepts in linear algebra. Advanced topics in this area include the study of diagonalization, eigenspaces, and the spectral theorem.

5. Matrix Decompositions: These topics involve factoring a matrix into a product of simpler matrices, such as diagonal, triangular, or orthogonal ones; examples include the LU, QR, eigenvalue, and singular value decompositions. Decompositions are useful for solving systems of linear equations, analyzing the properties of matrices, and performing computations efficiently.

6. Linear Transformations and their Representations: Advanced topics often involve studying linear transformations between vector spaces and their representations in matrix form. This includes the concept of dual spaces, transpose and adjoint transformations, and the study of linear functionals.

7. Tensor Algebra: Tensor algebra extends the notions of vectors and matrices to tensors, multilinear objects that can be represented as multidimensional arrays once bases are chosen. This topic explores concepts such as tensor products, contractions, and multilinear mappings.

8. Linear Programming: Linear programming involves optimizing a linear objective function, subject to a set of linear constraints. Advanced linear programming techniques often rely on linear algebra tools, such as matrix manipulations and the theory of convex sets.

9. Applications in Data Science and Machine Learning: Linear algebra plays a crucial role in data science and machine learning. Advanced topics in this area include dimensionality reduction techniques (e.g., Singular Value Decomposition), matrix factorization methods for recommendation systems, and matrix calculus for optimization algorithms.
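The Gram-Schmidt process from item 3 can be sketched in a few lines. This version is the modified Gram-Schmidt variant, which subtracts each projection from the partially orthogonalized vector rather than from the original one; it gives the same result in exact arithmetic but is numerically more stable. The function names and the tolerance `tol` are illustrative assumptions.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal basis for the span of `vectors` (modified Gram-Schmidt).

    Each vector has its components along the basis vectors found so far removed;
    the remainder is normalized and added to the basis. A vector whose remainder
    has norm below `tol` depends linearly on earlier ones and is skipped.
    """
    basis = []
    for v in vectors:
        w = [float(x) for x in v]
        for q in basis:
            coeff = dot(w, q)  # projecting the updated w is what makes this the "modified" variant
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        if norm > tol:
            basis.append([wi / norm for wi in w])
    return basis


# The third vector is the sum of the first two, so only two basis vectors result.
basis = gram_schmidt([[1, 1, 0], [1, 0, 1], [2, 1, 1]])
print(len(basis))  # 2
```

The result is an orthonormal basis of the 2-dimensional subspace spanned by the inputs. Library QR routines such as `numpy.linalg.qr` perform the same kind of orthogonalization with more robust algorithms.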

These are just some of the advanced topics in linear algebra. The field is broad, and there are many other specialized areas and applications.
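As one example of how eigenvalues are computed in practice, the power iteration method below estimates the eigenvalue of largest absolute value, together with a corresponding eigenvector, by repeatedly multiplying a vector by the matrix. It is a sketch under stated assumptions: the dominant eigenvalue must be strictly larger in magnitude than the others, and the starting vector must have a component along its eigenvector. The names are illustrative.

```python
import math

def mat_vec(A, v):
    """Multiply matrix A (list of rows) by vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def power_iteration(A, iterations=100):
    """Estimate the dominant eigenvalue of square matrix A and a unit eigenvector for it.

    Assumes one eigenvalue is strictly larger in absolute value than all others and
    that the starting vector has a nonzero component along its eigenvector.
    """
    n = len(A)
    v = [1.0] + [0.0] * (n - 1)  # starting vector e1 (an assumption; any suitable start works)
    for _ in range(iterations):
        w = mat_vec(A, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            raise ValueError("iteration collapsed to the zero vector")
        v = [x / norm for x in w]  # renormalize so the entries neither blow up nor vanish
    # Rayleigh quotient: since v has unit length, v . (A v) estimates the eigenvalue.
    lam = sum(a * b for a, b in zip(v, mat_vec(A, v)))
    return lam, v


A = [[2.0, 1.0],
     [1.0, 2.0]]  # eigenvalues 3 and 1
lam, v = power_iteration(A)
print(lam, v)  # lam close to 3, v close to [0.7071, 0.7071]
```

The result can be checked against the definition Av = λv. Library routines such as `numpy.linalg.eig` use more robust algorithms and return all eigenvalues at once.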

