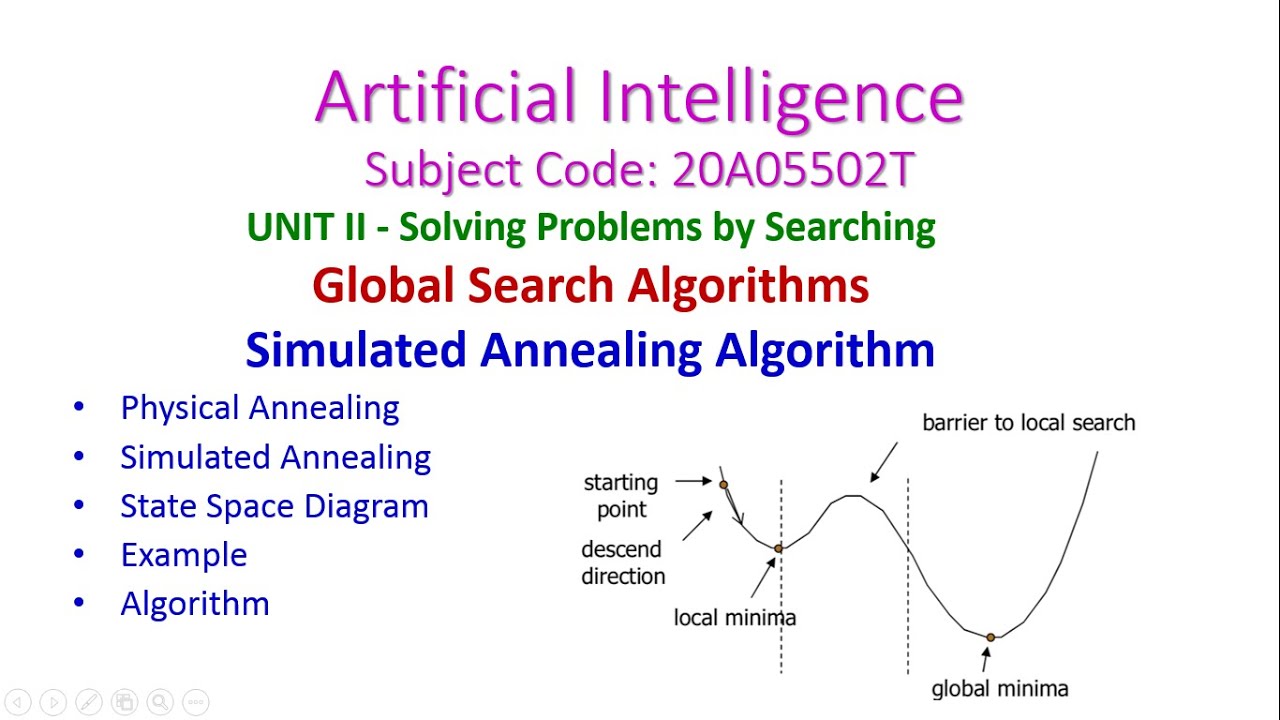

Introduction to Simulated Annealing

Simulated annealing is a probabilistic optimization algorithm that is inspired by the annealing process in metallurgy. It is commonly used to solve combinatorial optimization problems, where the goal is to find the optimal solution from a large set of possible solutions.

The algorithm works by maintaining a current solution and iteratively exploring the solution space in search of better solutions. It starts with an initial solution and then randomly perturbs it to generate a neighboring solution. The quality of this neighboring solution is evaluated using an objective function. If the neighboring solution is better than the current solution, it is accepted as the new current solution. However, if the neighboring solution is worse, it may still be accepted with a certain probability, allowing the algorithm to escape from local optima and explore other regions of the solution space.

The probability of accepting a worse solution is determined by a cooling schedule, which gradually reduces the acceptance probability as the algorithm progresses. This mimics the annealing process in metallurgy, where the temperature is gradually lowered to allow the metal to reach a more stable state.

Simulated annealing has been successfully applied to a wide range of optimization problems, including traveling salesman problem, graph coloring, and scheduling. It is particularly useful for problems where finding the global optimum is difficult due to the presence of multiple local optima.

Overall, simulated annealing is a powerful optimization algorithm that can help find near-optimal solutions to complex problems. By combining random exploration with probabilistic acceptance of worse solutions, it offers a balance between exploration and exploitation, making it a versatile technique for solving optimization problems.

Principles and Concepts of Simulated Annealing

Simulated annealing is a metaheuristic optimization algorithm inspired by the annealing process in metallurgy. It is used to find good solutions to combinatorial optimization problems where an exhaustive search is not possible or practical.

The basic principle of simulated annealing is based on the concept of exploring the search space by iteratively adjusting the current solution. The algorithm begins with an initial solution, which can be randomly generated or provided by a heuristic method.

Simulated annealing mimics the physical annealing process of slowly cooling a material to relieve its internal stresses. Similarly, in simulated annealing, the algorithm introduces random perturbations to the current solution and gradually decreases the amount of randomness as the algorithm proceeds.

The algorithm works by iteratively evaluating the current solution and comparing it with a new solution generated by making a small change to the current solution. If the new solution is better, it is accepted as the new current solution. However, if the new solution is worse, it is accepted with a probability that depends on a temperature parameter and a measure of the difference in quality between the two solutions. This probabilistic acceptance allows the algorithm to escape local optima and explore the search space more extensively.

The temperature parameter is initially high, causing the algorithm to accept worse solutions more often. As the algorithm progresses, the temperature gradually decreases, reducing the probability of accepting worse solutions. This cooling process helps the algorithm converge towards a near-optimal solution.

Simulated annealing uses a cooling schedule to control the temperature reduction rate. The cooling schedule determines how quickly or slowly the temperature decreases over time. A good cooling schedule balances the need for exploration in the early stages of the algorithm with the need for exploitation in the later stages to refine the solution.

Overall, the principles and concepts of simulated annealing make it a powerful optimization technique for solving complex problems with large search spaces where finding the global optimum is challenging. By combining random exploration with a controlled acceptance of worse solutions, simulated annealing can efficiently identify good solutions within a reasonable amount of time.

Steps Involved in Simulated Annealing Algorithm

The Simulated Annealing algorithm is an optimization technique inspired by the annealing process in metallurgy. It is used to find near-optimal solutions to optimization problems, particularly in situations where finding the exact global optimum is computationally expensive or infeasible. Here are the steps involved in the Simulated Annealing algorithm:

1. Initialize the solution: Start by initializing an initial solution to the optimization problem. This solution can be randomly generated or based on some heuristic. The initial solution is represented by a set of decision variables.

2. Set the initial temperature: The algorithm uses a temperature parameter, which determines the chance of accepting suboptimal solutions. The higher the temperature, the greater the probability of accepting suboptimal solutions. The initial temperature should be set high, allowing for a wide exploration of the search space.

3. Iteration process: The algorithm iterates through a series of steps until a stopping criterion is met. The steps include:

a. Generate a neighboring solution: Move from the current solution to a neighboring solution by perturbing the decision variables. This perturbation can be random or guided by some specific rules.

b. Evaluate the objective function: Calculate the objective function value for the new solution, which indicates the quality of the solution. The objective function quantifies the performance measure to be optimized.

c. Accept or reject the new solution: Compare the objective function values of the current and the new solution. If the new solution is better (has a smaller value for minimization or a larger value for maximization), accept it as the current solution. Otherwise, accept it with a certain probability depending on the current temperature and the difference between the objective function values. This is the key step that allows suboptimal solutions to be accepted, aiding in escaping local optima.

d. Update the temperature: After every iteration, decrease the temperature according to a predetermined cooling schedule. The cooling schedule determines how fast the temperature decreases, controlling the exploration-exploitation trade-off. As the temperature decreases, the algorithm becomes more selective in accepting suboptimal solutions.

4. Stopping criterion: The iteration process stops when a predefined stopping criterion is met. This criterion could be a maximum number of iterations, a target objective function value, or a certain level of solution improvement.

5. Return the best solution: Once the stopping criterion is met, return the best solution found throughout the entire process.

The Simulated Annealing algorithm provides a balance between exploring the search space and exploiting promising areas, allowing for a more comprehensive exploration and increasing the probability of finding near-optimal solutions to optimization problems.

Applications of Simulated Annealing in Mathematics

Simulated Annealing is a popular optimization algorithm that can be applied to various mathematical problems. Some of the common applications of Simulated Annealing in mathematics include:

1. Optimization problems: Simulated Annealing can be used to solve optimization problems, where the goal is to find the best solution among a set of possible solutions. It can be applied to problems such as finding the maximum or minimum of a mathematical function, determining the best allocation of resources, or finding the optimal route in a traveling salesman problem.

2. Graph theory problems: Simulated Annealing can be used to solve problems in graph theory, such as finding the maximum or minimum matching in a graph, determining the chromatic number of a graph, or finding the Hamiltonian cycle in a graph.

3. Global optimization: Simulated Annealing can be used to search for global optima in multi-modal optimization problems, where the objective function may have multiple local optima. It can help to avoid getting stuck in local optima by allowing occasional uphill moves based on a random selection process.

4. Machine learning and neural networks: Simulated Annealing can be used to train neural networks and optimize their weights and biases. By iteratively adjusting these parameters, Simulated Annealing helps to find the optimal configuration that minimizes the error between predicted and actual outcomes.

5. Sudoku puzzles: Simulated Annealing can be applied to solve Sudoku puzzles by treating the puzzle as a constraint satisfaction problem. By iteratively filling in empty cells and adjusting the values to satisfy the given constraints, Simulated Annealing can find a solution that satisfies all the puzzle’s requirements.

Overall, Simulated Annealing is a versatile algorithm that can be applied to various mathematical problems, particularly in optimization and search spaces with complex, multi-modal landscapes. Its ability to find near-optimal solutions makes it a valuable tool in many areas of mathematics.

Advantages and Limitations of Simulated Annealing

Simulated annealing is a widely used optimization algorithm inspired by the annealing process in metallurgy. It is particularly effective in finding near-optimal solutions for complex problems. However, simulated annealing also has its limitations. Let’s discuss the advantages and limitations of this algorithm.

Advantages of Simulated Annealing:

1. Escape Local Optima: Simulated annealing is capable of escaping local optima, which are suboptimal solutions that have lower objective function values compared to the global optimum. This is achieved by allowing occasional uphill moves during the search, giving the algorithm the ability to explore a broader solution space.

2. Metaheuristic Nature: Simulated annealing does not make any assumptions about the problem being solved and does not require any specific problem structure. It can be applied to a wide range of combinatorial optimization problems, including those with non-linear or non-differentiable objective functions.

3. Flexible Search Strategy: The algorithm can be easily adapted to different problem domains by changing the cooling schedule, which controls the rate at which the algorithm explores the search space. This flexibility allows simulated annealing to be used in different contexts and problem scenarios.

4. Converges to Global Optimum: Given enough time, simulated annealing will converge to the global optimum. Unlike other local search algorithms, it is not affected by the initial solution and has the potential to find the best solution in the search space.

Limitations of Simulated Annealing:

1. Time and Resource Intensive: Simulated annealing can be computationally expensive, especially for large-scale problems or problems with a large number of decision variables. The algorithm requires a sufficiently long exploration time to ensure convergence to the global optimum.

2. Suboptimal Solutions: Although simulated annealing can escape local optima, it may still converge to a suboptimal solution, particularly if the cooling schedule is not properly set or if the exploration time is limited. The quality of the solution obtained is dependent on the chosen parameters and the problem itself.

3. Difficulty in Parameter Tuning: Properly tuning the parameters, such as the cooling schedule and the acceptance probability function, can be challenging. An inappropriate choice of parameters may lead to slow convergence, premature freezing, or poor exploration of the solution space.

4. Lack of Theoretical Guarantee: Simulated annealing does not guarantee finding the optimal solution within a specific number of iterations or time. The algorithm explores the search space in a probabilistic manner, and the convergence to the global optimum is not guaranteed. Therefore, it is not suitable for applications requiring guaranteed optimality.

In summary, simulated annealing offers advantages such as escaping local optima, being applicable to a wide range of problems, and having a flexible search strategy. However, it also has limitations regarding computational expense, potential for suboptimal solutions, difficulty in parameter tuning, and lack of theoretical guarantee of optimality. Understanding these advantages and limitations is crucial when deciding whether to use simulated annealing for a particular optimization problem.

Topics related to Simulated Annealing

Lecture 36: Simulated Annealing – YouTube

Lecture 36: Simulated Annealing – YouTube

The simulated annealing algorithm explained with an analogy to a toy – YouTube

The simulated annealing algorithm explained with an analogy to a toy – YouTube

Simulated Annealing: A Solved Example – YouTube

Simulated Annealing: A Solved Example – YouTube

Simulated Annealing Explained By Solving Sudoku – Artificial Intelligence – YouTube

Simulated Annealing Explained By Solving Sudoku – Artificial Intelligence – YouTube

Simulated Annealing – YouTube

Simulated Annealing – YouTube

Simulated Annealing-Artificial Intelligence-Unit-2-Global Search Algorithms-20A05502T – YouTube

Simulated Annealing-Artificial Intelligence-Unit-2-Global Search Algorithms-20A05502T – YouTube

L32: Simulated Annealing in Artificial Intelligence | Difference Hill Climbing & Simulated Annealing – YouTube

L32: Simulated Annealing in Artificial Intelligence | Difference Hill Climbing & Simulated Annealing – YouTube

Simulated Annealing illustration – YouTube

Simulated Annealing illustration – YouTube

Deep Learning Cars – YouTube

Deep Learning Cars – YouTube

Artificial intelligence 19 Hill Climbing Search Algorithm in ai | lecture |tutorial| sanjaypathakjec – YouTube

Artificial intelligence 19 Hill Climbing Search Algorithm in ai | lecture |tutorial| sanjaypathakjec – YouTube

Peter Scholze is a distinguished German mathematician born on December 11, 1987. Widely recognized for his profound contributions to arithmetic algebraic geometry, Scholze gained international acclaim for his work on perfectoid spaces. This innovative work has significantly impacted the field of mathematics, particularly in the study of arithmetic geometry. He is a leading figure in the mathematical community.